Configure Kerberos authentication based on FreeIPA via ADCM

Overview

To kerberize a cluster using FreeIPA, follow the steps below:

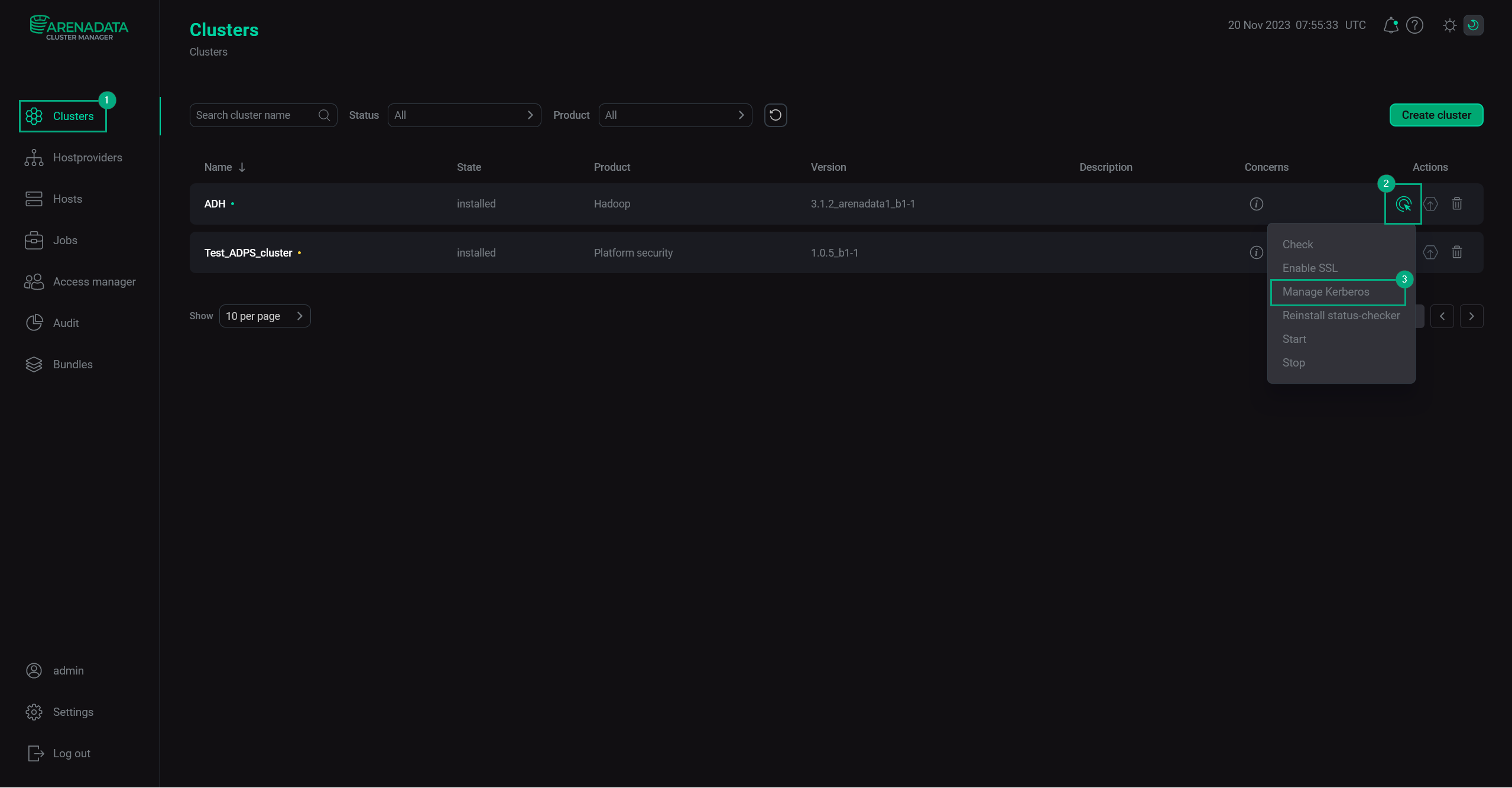

In the ADCM web UI, open the Clusters page, select an installed and prepared ADH cluster, and run the Manage Kerberos action.

Manage Kerberos

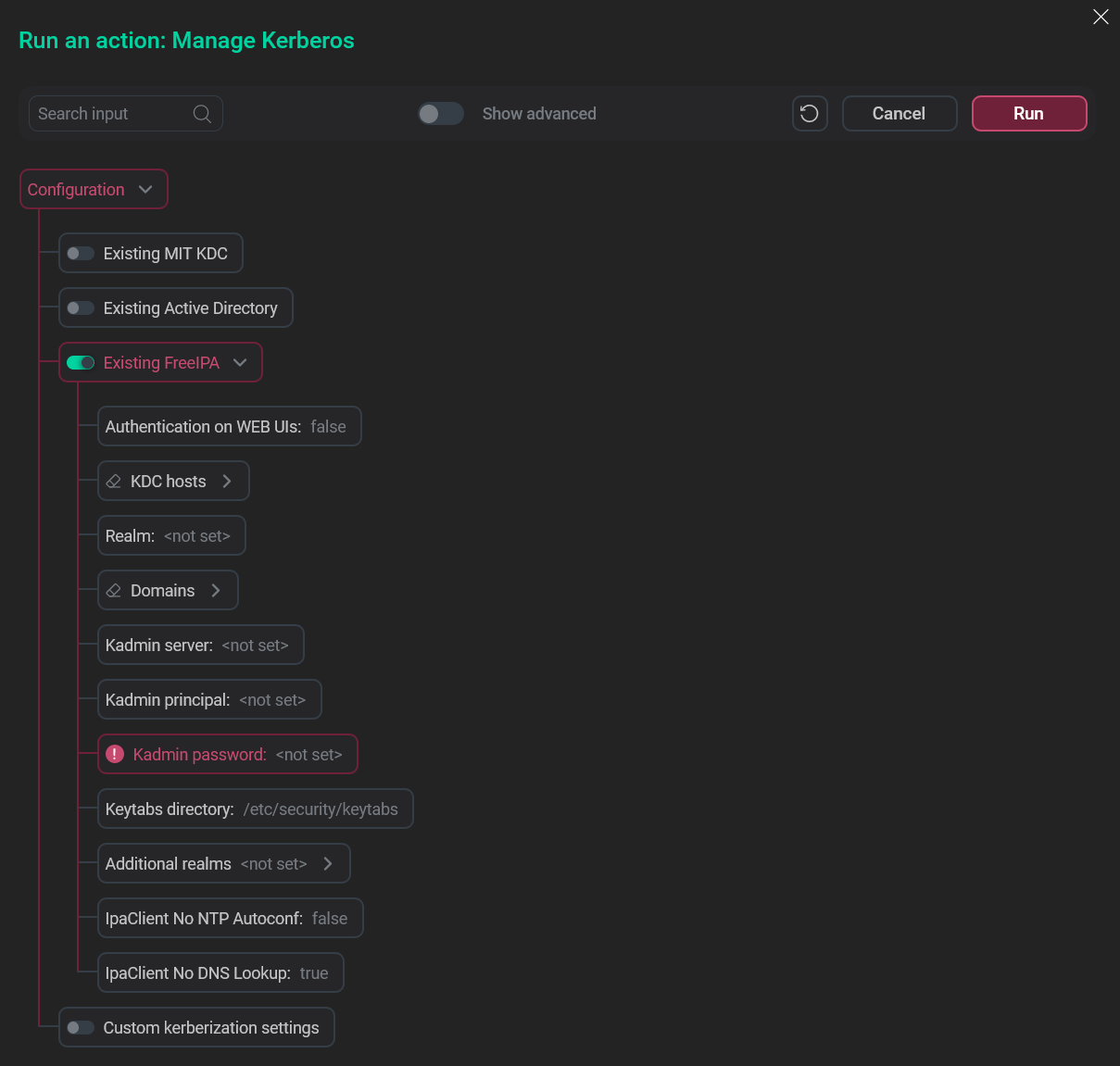

In the pop-up window, turn on the Existing FreeIPA option.

Choose the relevant option

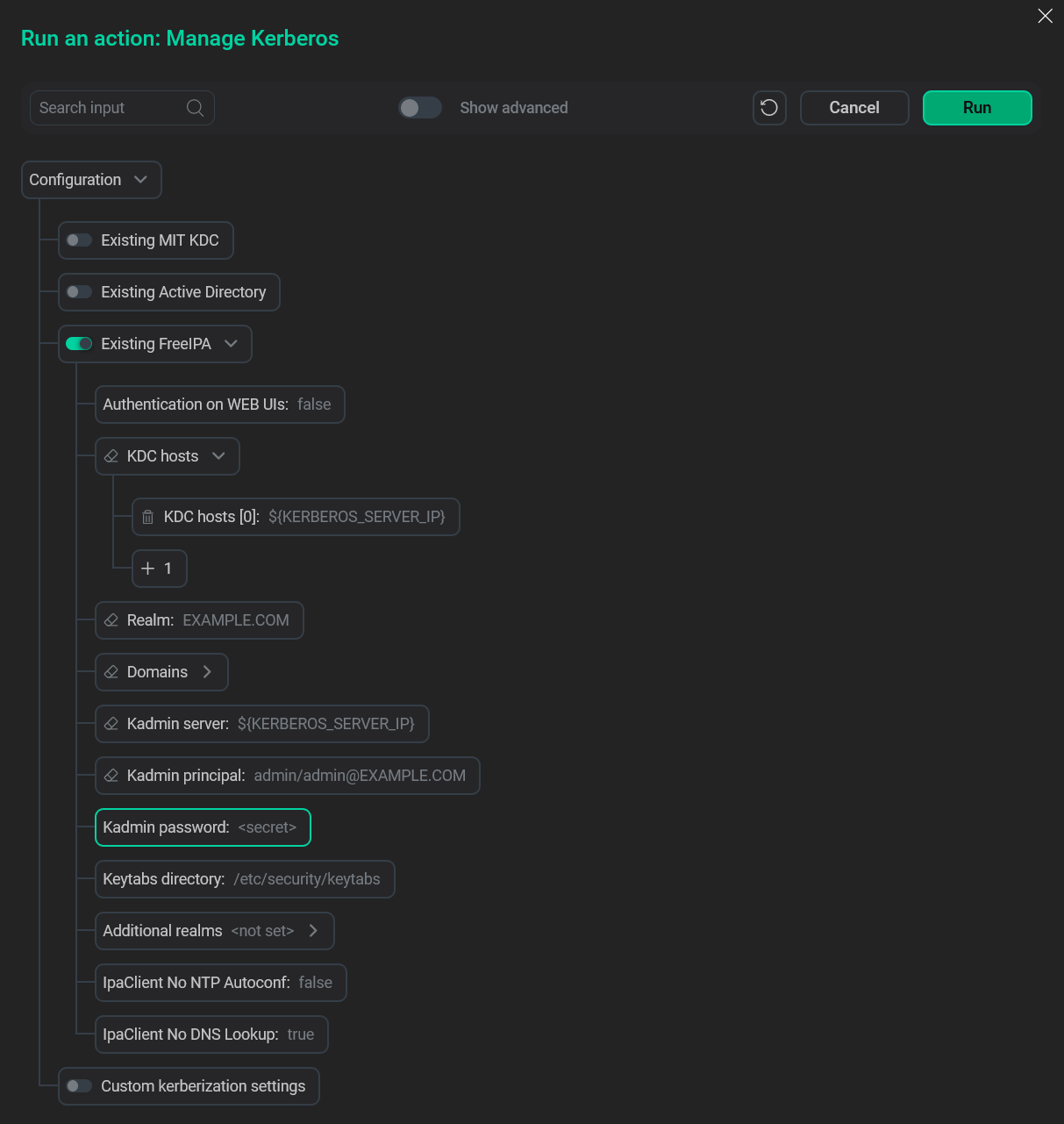

Fill in the FreeIPA Kerberos parameters.

FreeIPA Kerberos fields

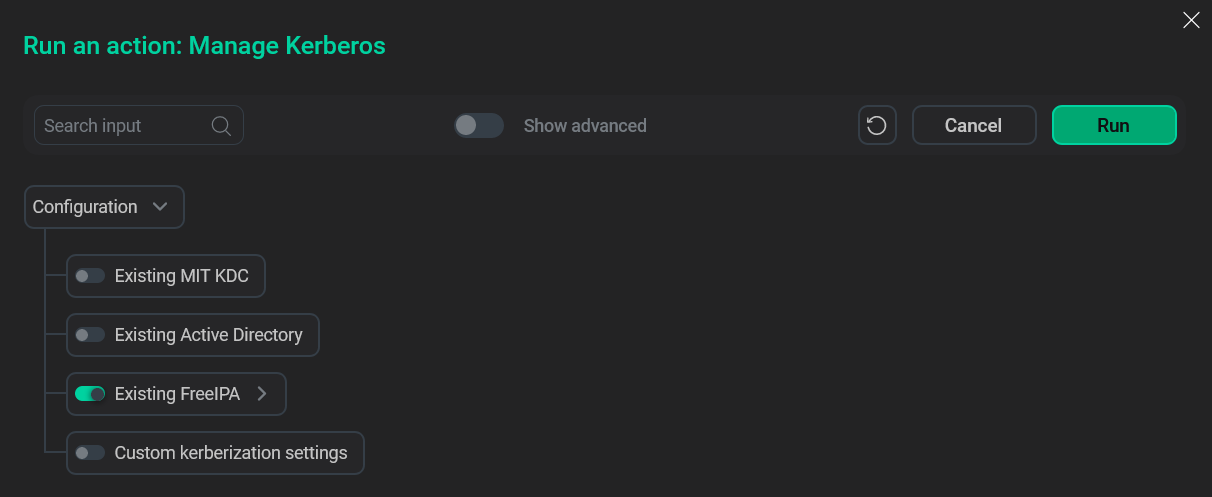

Click Run, wait for the job to complete and proceed to setting up Kerberos in the cluster.

Run the action

FreeIPA Kerberos parameters

The following parameters are required to kerberize your ADH cluster with FreeIPA.

You can get values for these by running ipa user-find <ipa_admin> on your FreeIPA server, where <ipa_admin> is your IPA Admin user.
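For instance, the realm can be read from the principal reported for the IPA Admin user. The snippet below is a minimal sketch, not part of ADCM or FreeIPA: `realm_from_user_find` is a hypothetical helper name, and it assumes the `ipa user-find` output contains a `Principal name: <user>@<REALM>` line.

```shell
# Hypothetical helper (not an ADCM or FreeIPA tool): print the realm part of
# every "Principal name" line read from stdin.
# Assumption: the input contains lines such as "  Principal name: admin@EXAMPLE.COM".
realm_from_user_find() {
  sed -n 's/^[[:space:]]*Principal name:[[:space:]]*[^@]*@\(.*\)$/\1/p'
}
```

On a FreeIPA server it could be used as `ipa user-find <ipa_admin> | realm_from_user_find`, where `<ipa_admin>` is your IPA Admin user.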

| Parameter | Description |
|---|---|
| Authentication on WEB UIs | Enables Kerberos authentication on Web UIs |
| KDC hosts | One or more KDC hosts with running FreeIPA server(s). Only FQDN is acceptable |
| Realm | A Kerberos realm to connect to the FreeIPA server |
| Domains | One or more domains associated with FreeIPA |
| Kadmin server | A host where |
| Kadmin principal | A principal name used to connect via |
| Kadmin password | An IPA Admin password |
| Keytabs directory | Directory of the keytab file that contains one or several principals along with their keys |
| Additional realms | Additional Kerberos realms |
| IpaClient No NTP Autoconf | Disables the NTP configuration during the IPA client installation |
| IpaClient No DNS Lookup | Disables the DNS lookup for FreeIPA server during the IPA client installation |
| Number of retries for kinit invocation attempts | Number of retries for kinit invocation attempts |
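Because the KDC hosts field accepts only FQDNs, it can help to check the values before running the action. The following is a minimal sketch under stated assumptions: `is_fqdn` is a hypothetical helper, not an ADCM feature, and the check is purely syntactic (it does not resolve the name and does not reject dotted IP addresses).

```shell
# Hypothetical helper (not part of ADCM): succeed if the argument looks like an FQDN,
# that is, two or more dot-separated labels of letters, digits, and inner hyphens.
is_fqdn() {
  printf '%s\n' "$1" | grep -Eq '^([A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?\.)+[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?$'
}
```

For example, `is_fqdn ka-adh-2.ru-central1.internal` succeeds, while `is_fqdn ka-adh-2` fails because the short host name has no domain part. The host names here are sample values only.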

Check FreeIPA integration

After a successful kerberization, Hadoop commands can be run on cluster nodes only after obtaining a Kerberos ticket. The examples below show cluster access attempts for an unsecured cluster and for a cluster kerberized with FreeIPA.

HDFS access before the cluster is kerberized

List the HDFS root contents:

    $ hdfs dfs -ls /

The output is as follows:

    Found 4 items
    drwxrwxrwt   - yarn hadoop          0 2023-01-31 16:46 /logs
    drwxr-xr-x   - hdfs hadoop          0 2023-01-31 16:43 /system
    drwxrwxrwx   - hdfs hadoop          0 2023-01-31 21:46 /tmp
    drwxr-xr-x   - hdfs hadoop          0 2023-01-31 17:00 /user

List the HDFS root contents via HttpFS using curl:

    $ curl "http://ka-adh-2.ru-central1.internal:14000/webhdfs/v1/?op=LISTSTATUS&user=admin"

The response is as follows:

{"FileStatuses":{"FileStatus":[{"pathSuffix":"logs","type":"DIRECTORY","length":0,"owner":"yarn","group":"hadoop","permission":"1777","accessTime":0,"modificationTime":1675183594965,"blockSize":0,"replication":0},...

HDFS access after enrolling FreeIPA

List the HDFS root contents:

    $ hdfs dfs -ls /

Sample output (access denied):

    2023-02-01 21:15:51,819 WARN ipc.Client: Exception encountered while connecting to the server : org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    2023-02-01 21:15:51,831 WARN ipc.Client: Exception encountered while connecting to the server : org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    2023-02-01 21:15:51,832 INFO retry.RetryInvocationHandler: java.io.IOException: DestHost:destPort ka-adh-3.ru-central1.internal:8020 , LocalHost:localPort ka-adh-1.ru-central1.internal/10.92.17.141:0. Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS], while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over ka-adh-3.ru-central1.internal/10.92.16.90:8020 after 1 failover attempts. Trying to failover after sleeping for 518ms ...

To authenticate, the UNIX user who runs the hdfs dfs … command (admin in this scenario) must obtain a Kerberos ticket:

    $ kinit admin

After getting a ticket, the command hdfs dfs -ls / prints the HDFS root contents, indicating that the authentication was successful:

    Found 4 items
    drwxrwxrwt   - yarn hadoop          0 2023-01-31 16:46 /logs
    drwxr-xr-x   - hdfs hadoop          0 2023-01-31 16:43 /system
    drwxrwxrwx   - hdfs hadoop          0 2023-01-31 21:46 /tmp
    drwxr-xr-x   - hdfs hadoop          0 2023-01-31 17:00 /user
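Scripts that call hdfs on a kerberized cluster may want to check for a valid ticket first. The sketch below assumes the MIT Kerberos client tools (`klist`, `kinit`) are installed on the node; `ensure_ticket` is a hypothetical helper name, not an ADH or ADCM utility.

```shell
# Hypothetical helper (not part of ADH/ADCM): request a ticket only when the
# current credentials cache is missing or expired.
ensure_ticket() {
  # klist -s prints nothing and exits non-zero if the cache cannot be read or is expired.
  if klist -s; then
    echo "ticket cache is valid"
  else
    # kinit asks for the principal's password interactively.
    kinit "$1"
  fi
}
```

A typical use would be `ensure_ticket admin && hdfs dfs -ls /`, so that the HDFS command runs only once a ticket is available.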

HDFS request:

    $ curl "http://ka-adh-2.ru-central1.internal:14000/webhdfs/v1/?op=LISTSTATUS&user=admin"

Response (access denied):

<html> <head> <meta http-equiv="Content-Type" content="text/html;charset=utf-8"/> <title>Error 401 Authentication required</title> </head> <body><h2>HTTP ERROR 401</h2> <p>Problem accessing /webhdfs/v1/. Reason: <pre> Authentication required</pre></p> </body> </html>

To pass the authentication, you have to get a Kerberos ticket for the user who runs curl (admin in this scenario):

    $ kinit admin

HDFS request:

    $ curl --negotiate -u : "http://ka-adh-2.ru-central1.internal:14000/webhdfs/v1/?op=LISTSTATUS&user=admin"

TIP

The --negotiate flag enables SPNEGO to allow curl access to a Kerberos-protected resource.

Response (successful):

{"FileStatuses":{"FileStatus":[{"pathSuffix":"logs","type":"DIRECTORY","length":0,"owner":"yarn","group":"hadoop","permission":"1777","accessTime":0,"modificationTime":1675183594965,"blockSize":0,"replication":0},{"pathSuffix":"system","type":"DIRECTORY","length":0,"owner":"hdfs","group":"hadoop","permission":"755","accessTime":0,"modificationTime":1675183407542,"blockSize":0,"replication":0},...
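To tell the two outcomes apart in a script, it is enough to look at the HTTP status code instead of the response body. The following is a minimal sketch, assuming a curl build with SPNEGO (GSS-API) support; `httpfs_status` is a hypothetical helper name introduced only for illustration.

```shell
# Hypothetical helper: print only the HTTP status code of a SPNEGO-authenticated request.
httpfs_status() {
  # -s hides the progress meter, -o /dev/null discards the body,
  # -w '%{http_code}' prints the status code once the transfer ends.
  curl -s -o /dev/null -w '%{http_code}' --negotiate -u : "$1"
}
```

Called with the HttpFS URL from the examples above, the helper would be expected to print 401 when the user has no valid ticket, matching the access denied response shown earlier, and 200 after a successful kinit.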