YARN high availability overview

ResourceManager (RM) is a YARN component responsible for allocating resources like CPU/RAM in a Hyperwave cluster and scheduling applications such as MapReduce jobs.

Two or more RMs can work in the high availability (HA) mode to provide uninterrupted operation even when a cluster node with one RM goes down. When an RM becomes unreachable, one of the standby RM instances automatically becomes active based on the election algorithm.

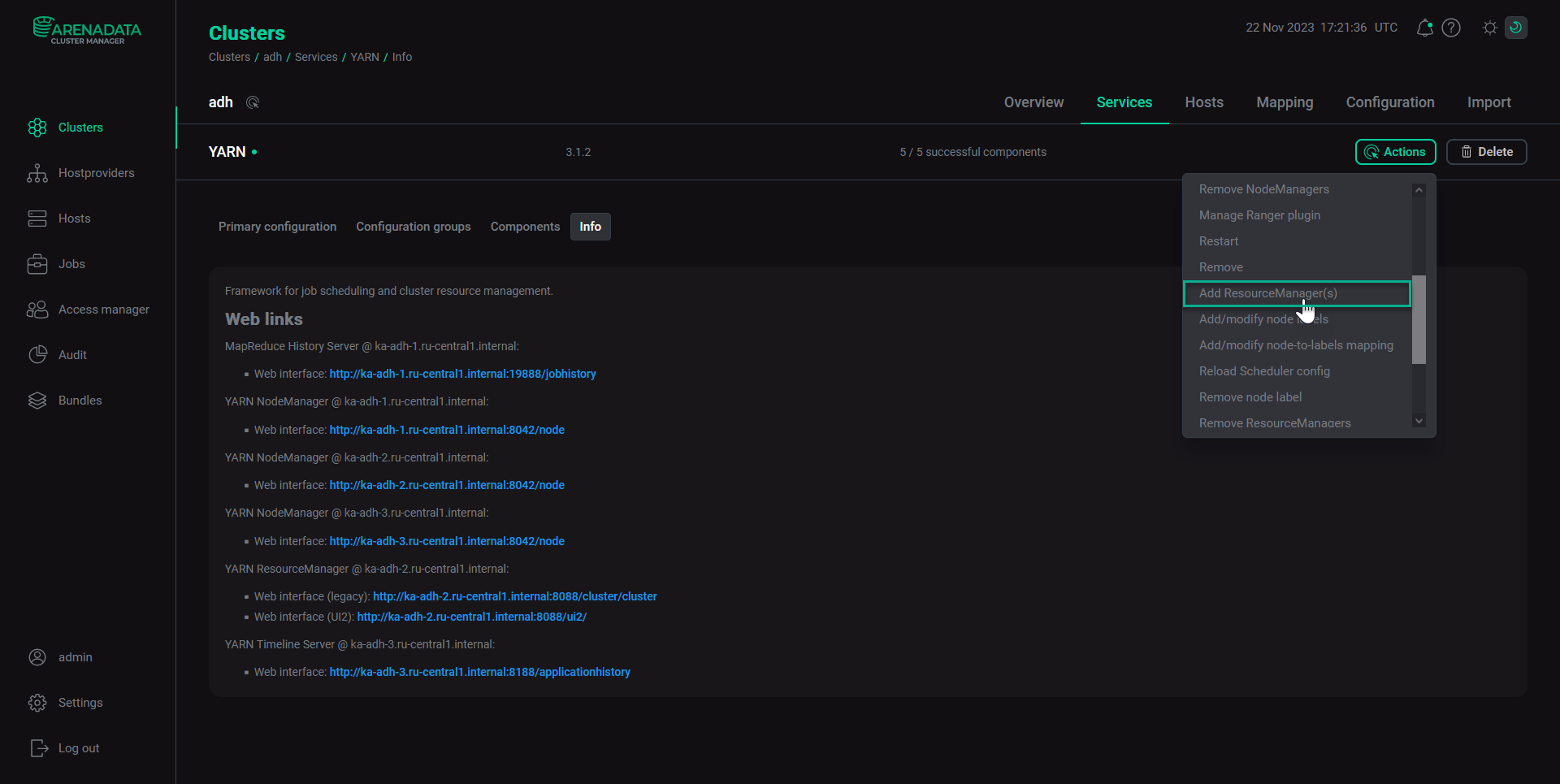

Starting with ADH 2.1.10.b1, no explicit actions are required to enable HA for ResourceManagers. The HA mode activates automatically when you add two or more YARN ResourceManager components using the ADCM UI as shown below.

ResourceManager HA requires ZooKeeper and HDFS services to be running.

Architecture

The ResourceManager HA mode follows the active-standby approach, that is, at any given time one RM is active and one or more RMs are in the standby state, waiting to replace the active RM, if needed. Switching from standby to active occurs automatically through the integrated failover controller.

Automatic failover

By default, when the active RM fails, the system automatically switches to a standby RM. During the failover, the system must ensure that no split-brain scenario occurs.

A split-brain scenario is a situation where multiple RMs assume they have the active role. This is undesirable because multiple RMs could proceed to manage some cluster nodes in parallel. This would cause inconsistency in resource management and job scheduling, potentially leading the entire system to a failure.

Thus, the goal is to ensure that at any given time there is one and only one active RM in the system. For this, ZooKeeper can be used to coordinate the failover process using the Leader Election algorithm described in the next section.

Leader Election algorithm

For a better understanding of the election process, it might be useful to review key ZooKeeper concepts.

A ZooKeeper client (a user, a service, or an application) can create Znodes of two types:

- Ephemeral. An ephemeral Znode gets deleted when the session in which the Znode was created ends. For example, if RM1 has created an ephemeral Znode eph_znode, this Znode gets deleted as soon as RM1’s session with ZooKeeper is closed.

- Persistent. A persistent Znode exists within ZooKeeper until it is deleted. Unlike ephemeral Znodes, a persistent Znode is not affected by the existence of the process that has created it. For example, if RM1 creates a persistent Znode, then even if RM1’s session with ZooKeeper gets disconnected, the persistent Znode created by RM1 still exists and is not deleted upon RM1 going down. This Znode can be removed only when it is deleted explicitly.

For more details about ZooKeeper architecture and data model, see ZooKeeper architecture.
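The difference between the two Znode types can be illustrated with a minimal in-memory sketch. It does not talk to a real ZooKeeper ensemble: the ToyZnodeStore class, its methods, and the session and Znode names are hypothetical and exist only for this illustration.

```python
class ToyZnodeStore:
    """Toy model of Znode lifetimes; not a real ZooKeeper client."""

    def __init__(self):
        # path -> (data, owner session id, or None for a persistent Znode)
        self.znodes = {}

    def create(self, path, data, session=None):
        """Create a Znode; pass a session id to make it ephemeral."""
        if path in self.znodes:
            raise KeyError(f"{path} already exists")
        self.znodes[path] = (data, session)

    def close_session(self, session):
        """Drop only the ephemeral Znodes that belong to the closed session."""
        self.znodes = {p: v for p, v in self.znodes.items() if v[1] != session}

    def delete(self, path):
        """Explicit delete, the only way to remove a persistent Znode."""
        del self.znodes[path]


store = ToyZnodeStore()
store.create("/eph_znode", b"rm1", session="rm1-session")   # ephemeral
store.create("/persistent_znode", b"rm1")                   # persistent

store.close_session("rm1-session")      # RM1's session ends
print(sorted(store.znodes))             # ['/persistent_znode']

store.delete("/persistent_znode")       # explicit removal
print(sorted(store.znodes))             # []
```

In the sketch, closing the session removes only the Znode tied to that session, while the persistent Znode survives until it is deleted explicitly.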

Election algorithm

Suppose there is a cluster with two RMs (RM1 and RM2) competing to become active.

This race is resolved as follows: the RM that first succeeds in creating an ephemeral Znode named ActiveStandbyElectorLock gets the active role.

It is important that only one RM is able to create the Znode named ActiveStandbyElectorLock.

If RM1 was the first to create the ActiveStandbyElectorLock Znode, RM2 will no longer be able to create the same Znode.

Thus, RM1 wins the election and becomes active, while RM2 gets the standby role.

Before going to the standby mode, RM2 creates a watch for the ActiveStandbyElectorLock Znode to get notifications about the Znode’s update/remove events.

If RM1 goes down, the ActiveStandbyElectorLock Znode gets deleted automatically due to being ephemeral.

Then, the standby RM2 becomes active.

RM2 gets notified about the removal of the Znode that it has been watching, so it tries to create its own ActiveStandbyElectorLock ephemeral Znode, succeeds, and finally gets the active role.
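The election steps above can be sketched as a small simulation. This is a simplified model under stated assumptions, not the actual YARN or ZooKeeper code: ToyZooKeeper and ToyRM are hypothetical classes, the lock path is shortened to a single Znode name, and a session is identified simply by the RM name.

```python
LOCK = "/ActiveStandbyElectorLock"


class ToyZooKeeper:
    """In-memory stand-in for ZooKeeper: ephemeral Znodes plus delete watches."""

    def __init__(self):
        self.znodes = {}    # path -> session id of the owner
        self.watches = {}   # path -> callbacks fired when the path is deleted

    def create_ephemeral(self, path, session):
        """Create the Znode; return False if it already exists."""
        if path in self.znodes:
            return False
        self.znodes[path] = session
        return True

    def watch_delete(self, path, callback):
        self.watches.setdefault(path, []).append(callback)

    def close_session(self, session):
        """Delete the session's ephemeral Znodes and notify their watchers."""
        owned = [p for p, s in self.znodes.items() if s == session]
        for path in owned:
            del self.znodes[path]
            for callback in self.watches.pop(path, []):
                callback()


class ToyRM:
    def __init__(self, name, zk):
        self.name = name
        self.zk = zk
        self.state = "standby"

    def join_election(self):
        if self.zk.create_ephemeral(LOCK, self.name):
            self.state = "active"       # won the race for the lock Znode
        else:
            self.state = "standby"
            # Retry the election once the lock Znode disappears.
            self.zk.watch_delete(LOCK, self.join_election)


zk = ToyZooKeeper()
rm1, rm2 = ToyRM("rm1", zk), ToyRM("rm2", zk)
rm1.join_election()
rm2.join_election()
print(rm1.state, rm2.state)   # active standby

zk.close_session("rm1")       # RM1 goes down, its ephemeral Znode is removed
print(rm2.state)              # active
```

The sketch leaves rm1.state untouched after the session closes, because the toy RM1 object has no way to learn that it lost the lock. That gap is the reason fencing is needed.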

Sometimes an RM gets disconnected only temporarily and then reconnects to ZooKeeper. However, ZooKeeper cannot determine the cause of the disconnect, so it deletes the ephemeral Znodes created by that RM. This can lead to a split-brain scenario in which both RMs assume the active role.

To avoid this, RM2 reads the information about the active RM from the persistent ActiveBreadCrumb Znode, where RM1 has already written its information (hostname and TCP port).

Once RM2 knows that RM1 is alive, it tries to fence RM1, that is, to force RM1 out of the active role.

On success, RM2 overwrites the data in the persistent ActiveBreadCrumb Znode with its own configuration (RM2’s hostname and TCP port), since RM2 is now the active RM.
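The breadcrumb-and-fencing logic can be sketched as follows. This is an illustration only: ToyRM, become_active, the dictionary that stands in for the persistent ActiveBreadCrumb Znode, and the host names and port number are all hypothetical. The race for the ActiveStandbyElectorLock Znode is omitted, and the sketch assumes the candidate RM has already won it.

```python
class ToyRM:
    def __init__(self, name, host, port):
        self.name, self.host, self.port = name, host, port
        self.state = "standby"

    def fence(self):
        """Force this RM out of the active role (stand-in for real fencing)."""
        self.state = "standby"
        return True


def become_active(candidate, breadcrumb, rms_by_address):
    """Fence the previous active RM recorded in the breadcrumb, then take over."""
    previous = breadcrumb.get("active")
    if previous is not None and previous != (candidate.host, candidate.port):
        old_rm = rms_by_address[previous]
        if not old_rm.fence():
            return False    # never go active while the old RM is unfenced
    # Record the new active RM so that a later winner knows whom to fence.
    breadcrumb["active"] = (candidate.host, candidate.port)
    candidate.state = "active"
    return True


rm1 = ToyRM("rm1", "rm1.example.com", 8033)
rm2 = ToyRM("rm2", "rm2.example.com", 8033)
rms_by_address = {(rm.host, rm.port): rm for rm in (rm1, rm2)}
breadcrumb = {}   # stands in for the persistent ActiveBreadCrumb Znode

become_active(rm1, breadcrumb, rms_by_address)
# RM1 briefly loses its ZooKeeper session but still believes it is active.
become_active(rm2, breadcrumb, rms_by_address)

print(rm1.state, rm2.state)   # standby active
print(breadcrumb["active"])   # ('rm2.example.com', 8033)
```

Because the breadcrumb is persistent, it survives the loss of RM1’s session, which is what lets RM2 find and fence RM1 before taking the active role.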

Client, ApplicationMaster, and NodeManager on RM failover

When there are multiple RMs, the configuration in the yarn-site.xml file used by clients and nodes must list all these RMs.

Clients, ApplicationMasters, and NodeManagers try connecting to the RMs in a round-robin order until they hit the active RM. If the active RM goes down, they resume the round-robin polling until they hit the "new" active RM. The failover policy for them is defined by one of the following properties:

- When HA is used, the retry logic is specified by the yarn.client.failover-proxy-provider property that defaults to org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider. You can override the logic by providing a custom class that implements org.apache.hadoop.yarn.client.RMFailoverProxyProvider and setting the property value to this class name.

- When running in the non-HA mode, the retry logic is specified by the yarn.client.failover-no-ha-proxy-provider property.
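The round-robin polling described above can be sketched in a few lines. This is a simplified model, not the logic of ConfiguredRMFailoverProxyProvider: the find_active function, the is_active callback, the RM identifiers, and the attempt limit are assumptions made for the illustration.

```python
from itertools import cycle


def find_active(rm_ids, is_active, max_attempts):
    """Poll RMs in round-robin order; return the first active id, or None."""
    for attempt, rm_id in enumerate(cycle(rm_ids)):
        if attempt >= max_attempts:
            break
        if is_active(rm_id):
            return rm_id
    return None


# Hypothetical cluster state, keyed by the RM ids listed in the client config.
states = {"rm1": "active", "rm2": "standby"}


def probe(rm_id):
    return states[rm_id] == "active"


print(find_active(["rm1", "rm2"], probe, max_attempts=6))   # rm1

# RM1 goes down and RM2 takes over; the client resumes polling.
states.update(rm1="down", rm2="active")
print(find_active(["rm1", "rm2"], probe, max_attempts=6))   # rm2
```

The attempt limit keeps the sketch from looping forever when no RM is active. A real client would typically also wait between attempts.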