Configure and use GPU on YARN

Overview

For computationally intensive tasks, Hadoop can be configured to run YARN applications on GPU instead of CPU.

Prerequisites for using GPU on YARN:

-

An Nvidia GPU.

-

Nvidia drivers.

-

If Docker is used, nvidia-docker 1.0 has to be installed.

-

YARN uses Capacity scheduler.

GPU configuration

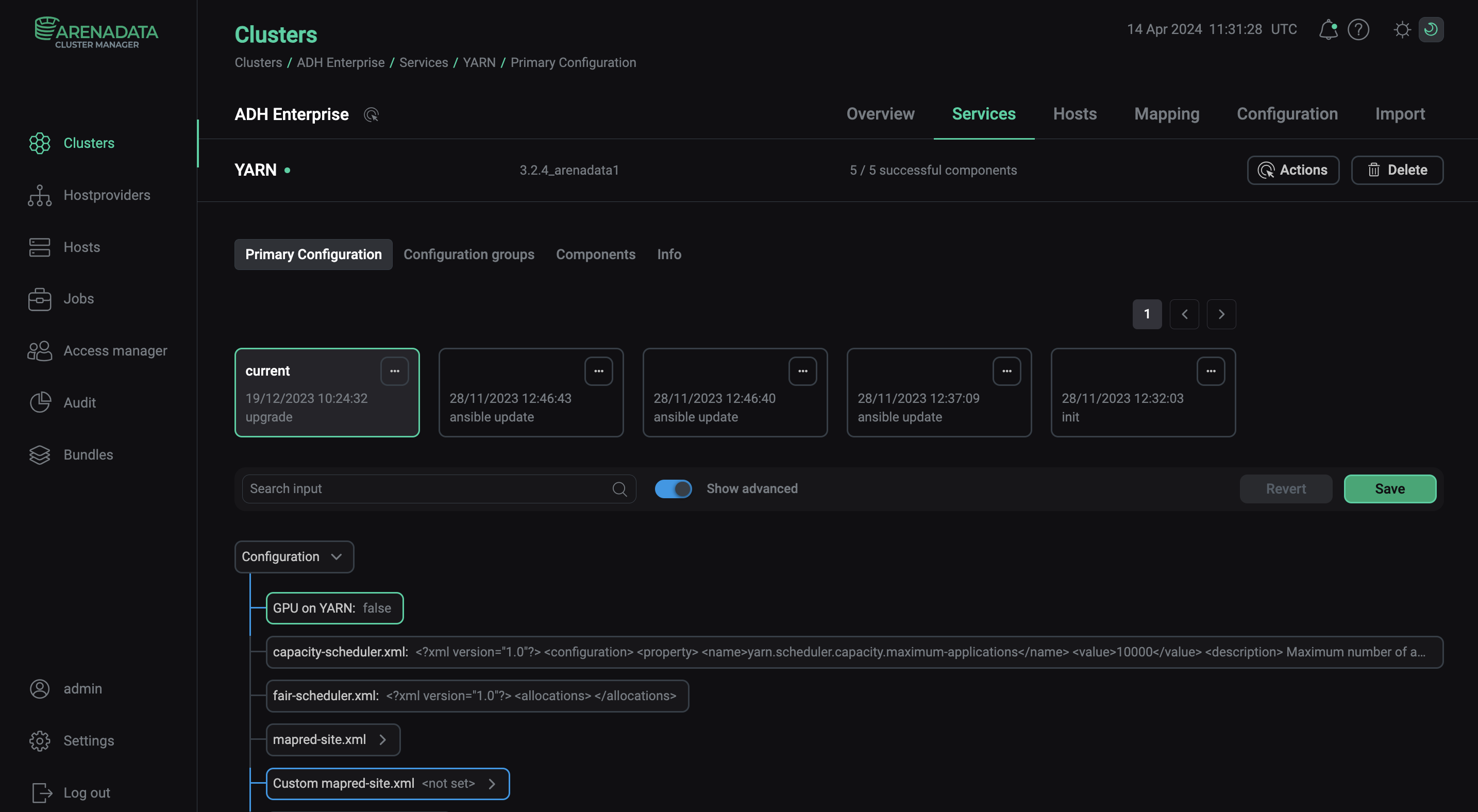

To configure the GPU support on YARN via ADCM:

-

On the Clusters page, select the desired cluster.

-

Navigate to Services and click at YARN.

-

Toggle the Show advanced option, find the GPU on YARN setting and set it to

true. GPU on YARN setting in ADCM

GPU on YARN setting in ADCM -

Find and click at the capacity-scheduler.xml property.

-

In the opened window, change the

yarn.scheduler.capacity.resource-calculatorproperty value toorg.apache.hadoop.yarn.util.resource.DominantResourceCalculator:<configuration> <property> <name>yarn.scheduler.capacity.resource-calculator</name> <value>org.apache.hadoop.yarn.util.resource.DominantResourceCalculator</value> </property> </configuration> -

In the yarn-site.xml parameter group, edit the properties to create the desired configuration for your cluster that would account for the GPU usage. For example:

<configuration> <property> <name>yarn.nodemanager.resource-plugins</name> <value>yarn.io/gpu</value> </property> <property> <name>yarn.resource-types</name> <value>yarn.io/gpu</value> </property> <property> <name>yarn.nodemanager.resource-plugins.gpu.allowed-gpu-devices</name> <value>auto</value> </property> <property> <name>yarn.nodemanager.resource-plugins.gpu.path-to-discovery-executables</name> <value>/usr/bin</value> </property> <property> <name>yarn.nodemanager.linux-container-executor.cgroups.mount</name> <value>true</value> </property> <property> <name>yarn.nodemanager.linux-container-executor.cgroups.mount-path</name> <value>/sys/fs/cgroup</value> </property> <property> <name>yarn.nodemanager.linux-container-executor.cgroups.hierarchy</name> <value>yarn</value> </property> <property> <name>yarn.nodemanager.container-executor.class</name> <value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value> </property> <property> <name>yarn.nodemanager.linux-container-executor.group</name> <value>yarn</value> </property> </configuration> -

Find the container-executor.cfg template parameter and add the following values to the template:

yarn.nodemanager.linux-container-executor.group=yarn #--Original container-exectuor.cfg Content-- [gpu] module.enabled=true [cgroups] root=/sys/fs/cgroup yarn-hierarchy=yarnIf you need to run GPU applications under Docker environment, add these additional properties to container-executor.cfg template:

[docker] docker.allowed.devices=/dev/nvidiactl,/dev/nvidia-uvm,/dev/nvidia-uvm-tools,/dev/nvidia1,/dev/nvidia0 (1) docker.allowed.volume-drivers (2) docker.allowed.ro-mounts=nvidia_driver_375.66 (3) docker.allowed.runtimes=nvidia (4)1 Adds GPU devices to the Docker section. Enter the list of values separated by comma. You can find the list of devices using the ls /dev/nvidia*command.2 Adds nvidia-dockerto volume-driver whitelist.3 Adds nvidia_driver_<version>to read-only mounts whitelist.4 To use nvidia-docker-v2 as the GPU Docker plugin, add nvidiato runtimes whitelist. -

Click Apply and confirm changes to YARN configuration by clicking Save.

-

In the Actions drop-down menu, select Restart, make sure the Apply configs from ADCM option is set to

trueand click Run. This will automatically update all the necessary configuration files on all hosts.

|

NOTE

In some cases, it might be required to share the NodeManager’s local directory to all users to avoid permission-related errors. |

Depending on your cluster configuration, some of the parameters can be optional for using GPUs and can be set to default values.

| Parameter | Description | Default value |

|---|---|---|

yarn.nodemanager.resource-plugins |

Set to |

— |

yarn.nodemanager.resource-plugins.gpu.allowed-gpu-devices |

A list of comma-separated GPU devices in the following format: |

auto |

yarn.nodemanager.resource-plugins.gpu.path-to-discovery-executables |

Path to the GPU discovery binary used by NodeManagers for gathering the information about GPUs if |

— |

yarn.nodemanager.resource-plugins.gpu.docker-plugin |

The Docker command plugin for GPU. By default, the Nvidia Docker v1.0 is used |

nvidia-docker-v1 |

yarn.nodemanager.resource-plugins.gpu.docker-plugin.nvidia-docker-v1.endpoint |

The Nvidia Docker plugin endpoint |

http://localhost:3476/v1.0/docker/cli |

yarn.nodemanager.linux-container-executor.cgroups.mount |

Use the CGroup devices controller to do per-GPU device isolation |

true |

Use GPU on YARN

Here are the examples of running YARN applications using the yarn jar command in a YARN or Docker container.

To run an application in a YARN container, run:

$ yarn jar <path/to/hadoop-yarn-applications-distributedshell.jar> \

-jar <path/to/hadoop-yarn-applications-distributedshell.jar> \

-shell_command /usr/local/nvidia/bin/nvidia-smi \

-container_resources memory-mb=3072,vcores=1,yarn.io/gpu=2 \

-num_containers 2This command requests 2 containers, 3GB each, 1 vCore, and 2 GPUs.

To run an application in a Docker container, run:

$ yarn jar <path/to/hadoop-yarn-applications-distributedshell.jar> \

-jar <path/to/hadoop-yarn-applications-distributedshell.jar> \

-shell_env YARN_CONTAINER_RUNTIME_TYPE=docker \

-shell_env YARN_CONTAINER_RUNTIME_DOCKER_IMAGE=<docker-image-name> \

-shell_command nvidia-smi \

-container_resources memory-mb=3072,vcores=1,yarn.io/gpu=2 \

-num_containers 2This command requests 2 containers, 3GB each, 1 vCore, and 2 GPUs.