Add HDFS data directories

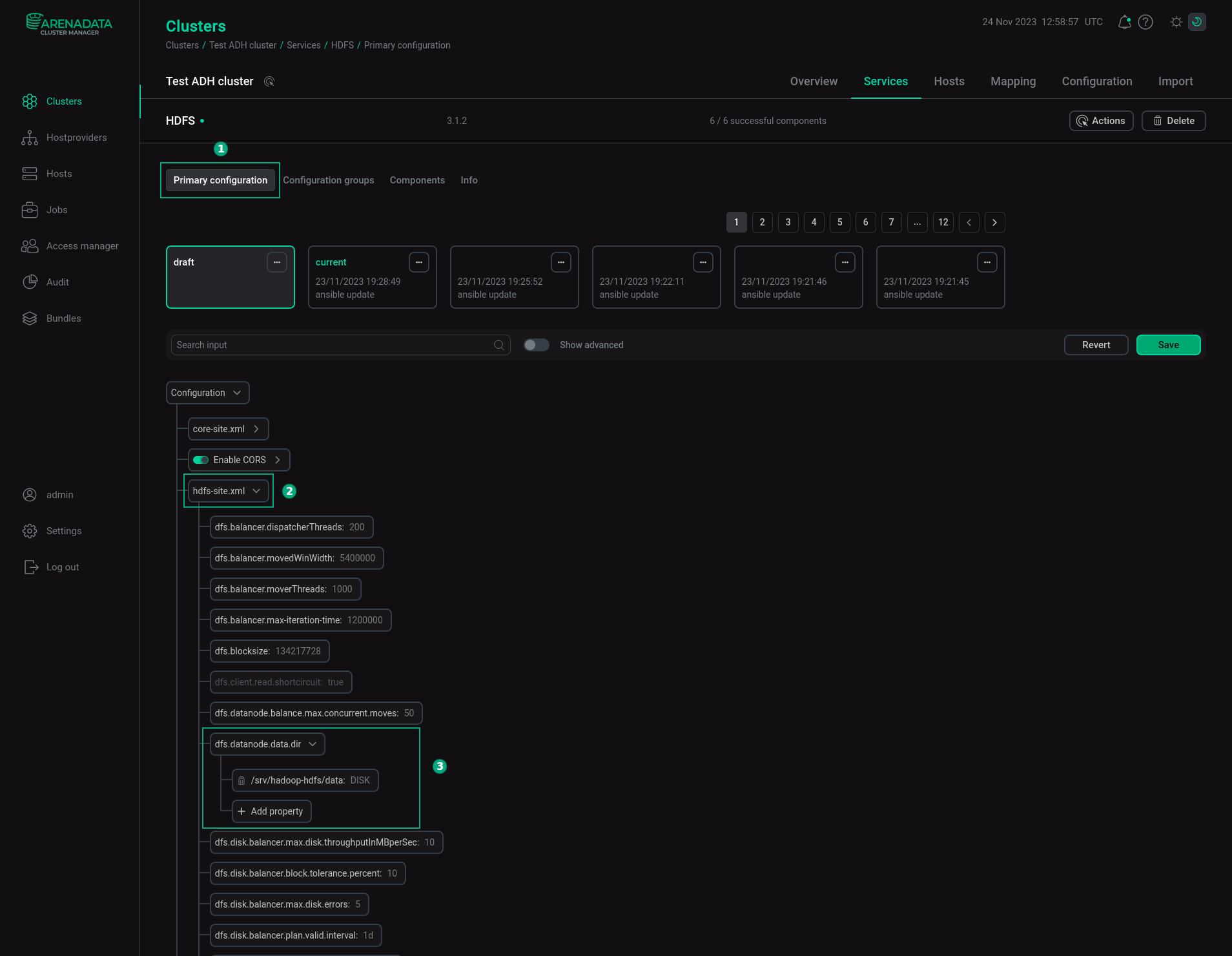

HDFS data directories are the local directories where DataNodes store their data blocks. These directories can be located on different devices, for example, on separately mounted physical disks. You can add data directories in the ADCM UI: open the HDFS service of the ADH cluster, go to the Primary configuration tab, expand the hdfs-site.xml node in the configuration parameters tree, and find the dfs.datanode.data.dir parameter among its nested elements.

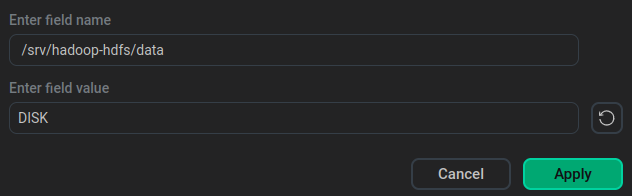

To add a new data directory, click the Add property element nested under dfs.datanode.data.dir. In the dialog window, specify the directory path, for example, /srv/hadoop-hdfs/data, and select one of the following storage types: SSD, DISK, ARCHIVE, or RAM_DISK.

Click Apply. An item with the new data directory is added to the configuration tree.
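In the Hadoop hdfs-site.xml format, each entry of dfs.datanode.data.dir can be tagged with its storage type, for example, [SSD]/srv/hadoop-hdfs/data. Multiple entries are comma-separated, and an untagged entry defaults to DISK. The following minimal sketch assembles such a value from type/path pairs. It assumes that ADCM renders the configured entries into this format; the function name build_data_dir_value and the path /mnt/disk1/hdfs/data are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds a dfs.datanode.data.dir value in which each
# directory is tagged with its storage type, e.g. "[SSD]/srv/hadoop-hdfs/data".
set -euo pipefail

build_data_dir_value() {
  # Arguments: "TYPE:PATH" pairs, e.g. "DISK:/mnt/disk1/hdfs/data"
  local entry type path value=""
  for entry in "$@"; do
    type=${entry%%:*}   # text before the first colon
    path=${entry#*:}    # text after the first colon
    # Accept only the storage types listed in the ADCM dialog
    case "$type" in
      SSD|DISK|ARCHIVE|RAM_DISK) ;;
      *) echo "unsupported storage type: $type" >&2; return 1 ;;
    esac
    # Prepend a comma to every entry except the first one
    value+="${value:+,}[${type}]${path}"
  done
  printf '%s\n' "$value"
}

build_data_dir_value "DISK:/mnt/disk1/hdfs/data" "SSD:/srv/hadoop-hdfs/data"
```

The call above prints [DISK]/mnt/disk1/hdfs/data,[SSD]/srv/hadoop-hdfs/data. Validating the storage type up front catches typos such as SDD before they reach a DataNode restart.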

You also need to perform the following steps:

- Before you add a new value to the dfs.datanode.data.dir parameter, create the directory if it does not exist and set its owner and permissions on all DataNodes as follows:

  ```shell
  $ mkdir -p /path/to/data/dir
  $ chown -R hdfs:hadoop /path/to/data/dir
  $ chmod -R 755 /path/to/data/dir
  ```

  Where /path/to/data/dir is the path to the data directory or the path in the local file system where this directory should be created.

- Data directories must have the same path and content on all DataNodes. Different settings are allowed only for different configuration groups.

- For better performance, it is recommended to mount the file systems that hold external data directories with the noatime option in the /etc/fstab file, for example:

  ```
  /path/to/device /path/to/data/dir ext3 defaults,noatime 1 2
  ```

  Where /path/to/device is the device that holds the file system. The last field, 2, makes fsck check this file system after the root file system.
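After running the commands above on a DataNode, a quick check can confirm the result. The following is a minimal sketch, assuming GNU or BusyBox stat and the util-linux findmnt utility are available; the function name check_data_dir is hypothetical. It fails if the directory is missing or if its owner, group, and mode differ from hdfs:hadoop 755, and it only warns if the file system holding the directory is not mounted with noatime.

```shell
#!/usr/bin/env bash
# Hypothetical check for a DataNode data directory: owner, group, mode, noatime.
set -euo pipefail

check_data_dir() {
  # $2 overrides the expected "owner:group mode" string (default: hdfs:hadoop 755)
  local dir=$1 expected=${2:-hdfs:hadoop 755} actual
  if [ ! -d "$dir" ]; then
    echo "missing: $dir" >&2
    return 1
  fi
  # %U = owner name, %G = group name, %a = permissions in octal
  actual=$(stat -c '%U:%G %a' "$dir")
  if [ "$actual" != "$expected" ]; then
    echo "unexpected owner/mode for $dir: $actual (expected $expected)" >&2
    return 1
  fi
  # Warn only: noatime is a recommendation, not a requirement
  if ! findmnt -no OPTIONS --target "$dir" | grep -qw noatime; then
    echo "warning: $dir is not on a file system mounted with noatime" >&2
  fi
  echo "ok: $dir"
}

# Check every directory passed on the command line
if [ "$#" -gt 0 ]; then
  for d in "$@"; do
    check_data_dir "$d"
  done
fi
```

Run the script on each DataNode with the data directories as arguments, for example, /srv/hadoop-hdfs/data. Because set -e is enabled, the script stops at the first directory that fails the check.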

If you do not set the permissions, HDFS fails on the next restart with a NameNode error, for example: "the reported blocks 0 needs additional 478 blocks to reach the threshold 0.9990 of total blocks 479". To fix this error, set the correct permissions on the added data directories and restart HDFS. If this does not help, check that all added directories contain consistent data on all DataNodes: they should have the same set of files and directories.
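The numbers in this message come from the NameNode safe mode check. The NameNode stays in safe mode until DataNodes report at least the fraction of blocks set by dfs.namenode.safemode.threshold-pct, which is 0.999 by default. In the example, 0 blocks were reported, so all 478 required blocks are still missing. The sketch below reproduces this arithmetic, assuming the required count is the integer part of total blocks × threshold, an assumption that matches the 478 of 479 blocks in the message. The function name safemode_blocks_needed is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reproduces the safe mode numbers from the NameNode message.
# Assumption: required blocks = integer part of (total blocks * threshold).
set -euo pipefail

safemode_blocks_needed() {
  local reported=$1 total=$2 threshold=$3
  # awk does the floating-point multiplication; int() truncates toward zero
  awk -v r="$reported" -v t="$total" -v p="$threshold" \
    'BEGIN { need = int(t * p) - r; if (need < 0) need = 0; print need }'
}

safemode_blocks_needed 0 479 0.999   # prints 478
```

With 479 total blocks, the NameNode can leave safe mode once 478 blocks are reported. Any value above 0 for "needs additional" means some DataNodes have not reported their blocks yet.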