Logging in Spark

By default, Spark event logging is enabled, and all event logs are stored in the /var/log/spark3/apps directory in HDFS. Under this base directory, Spark writes a separate log file for each submitted Spark application.

Spark components such as History Server and Livy also log their activity on the local file system under the following directories:

- /var/log/spark3/history: History Server logs.
- /var/log/livy-spark3: Livy logs.
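To check the most recent local log of History Server or Livy, you can locate the newest file in the corresponding directory. The following POSIX shell sketch assumes that these components write plain-text log files directly into the directories listed above; the helper name `newest_file` is hypothetical.

```shell
# newest_file DIR: print the path of the most recently modified entry in DIR.
# Hypothetical helper; relies on `ls -t` (newest first) and assumes file names
# contain no newline characters. The body runs in a subshell to keep variables local.
newest_file() (
  dir=$1
  name=$(ls -t -- "$dir" | head -n 1)
  [ -n "$name" ] || exit 1   # the directory is empty, missing, or unreadable
  printf '%s/%s\n' "$dir" "$name"
)
```

For example, `tail -n 50 "$(newest_file /var/log/spark3/history)"` prints the last 50 lines of the newest History Server log (reading these files may require `sudo`).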

View logs

There are two ways to view Spark logs:

- As plain text files.
- Using the History Server web UI. In this case, Spark logs must be stored in HDFS so that History Server can access the log data generated by all cluster nodes.

Text logs

Spark writes each event log as a text file in which every line is a JSON record describing a single event. To view logs as text, do the following:

- List the contents of the base log directory (spark.eventLog.dir):

      $ sudo -u hdfs hdfs dfs -ls /var/log/spark3/apps

  The output lists the log files of the corresponding Spark applications:

      Found 3 items
      -rwxrwx--- 3 admin hadoop 57123 2023-08-24 13:32 /var/log/spark3/apps/application_1690315534352_0005
      -rw-rw---- 3 admin hadoop 126205 2023-08-24 14:52 /var/log/spark3/apps/application_1690315534352_0006
      -rw-rw---- 3 admin hadoop 235626 2023-08-25 09:14 /var/log/spark3/apps/application_1690315534352_0007

- View a specific log with the following command:

      $ sudo -u hdfs hdfs dfs -tail /var/log/spark3/apps/application_1690315534352_0007

  The -tail command prints only the last kilobyte of the file, so the first record in the output is usually cut off (use -cat to print the whole file). The log contents look similar to the following:

      ... ID":"2","Executor Info":{"Host":"ka-adh-2.ru-central1.internal","Total Cores":1,"Log Urls":{"stdout":"http://ka-adh-2.ru-central1.internal:8042/node/containerlogs/container_e01_1690315534352_0006_01_000003/admin/stdout?start=-4096","stderr":"http://ka-adh-2.ru-central1.internal:8042/node/containerlogs/container_e01_1690315534352_0006_01_000003/admin/stderr?start=-4096"},"Attributes":{"NM_HTTP_ADDRESS":"ka-adh-2.ru-central1.internal:8042","USER":"admin","LOG_FILES":"stderr,stdout","NM_HTTP_PORT":"8042","CLUSTER_ID":"","NM_PORT":"8041","HTTP_SCHEME":"http://","NM_HOST":"ka-adh-2.ru-central1.internal","CONTAINER_ID":"container_e01_1690315534352_0006_01_000003"},"Resources":{},"Resource Profile Id":0}}
      {"Event":"SparkListenerBlockManagerAdded","Block Manager ID":{"Executor ID":"2","Host":"ka-adh-2.ru-central1.internal","Port":46065},"Maximum Memory":384093388,"Timestamp":1692888721218,"Maximum Onheap Memory":384093388,"Maximum Offheap Memory":0}
      {"Event":"SparkListenerApplicationEnd","Timestamp":1692888752753}
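Because every line of an event log is a separate JSON record, standard text tools are enough for a quick overview. The sketch below counts how many events of each type a log contains. It matches the top-level `"Event":"..."` field with `grep` and `sed` rather than parsing JSON, so treat it as a rough diagnostic; the helper name `count_events` is hypothetical.

```shell
# count_events: read a Spark event log from stdin and print "<count> <event type>"
# lines, most frequent first. Hypothetical helper: it extracts the
# "Event":"..." field with grep/sed instead of using a real JSON parser.
count_events() {
  grep -o '"Event":"[^"]*"' \
    | sed 's/^"Event":"//; s/"$//' \
    | sort \
    | uniq -c \
    | sort -rn \
    | awk '{ print $1, $2 }'   # normalize the padding that uniq -c adds
}
```

For example: `sudo -u hdfs hdfs dfs -cat /var/log/spark3/apps/application_1690315534352_0007 | count_events`.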

History Server web UI

The Spark3 History Server component provides a web UI to visualize event logs of completed Spark applications. The web UI is available at http://<history-host>:<history-port>, where:

- <history-host> is the address of the host where the Spark3 History Server component is installed.
- <history-port> is the web UI port, 18092 by default. You can change it using the spark.history.ui.port property in ADCM.
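Besides the web UI, Spark History Server exposes a REST API under `/api/v1` on the same port; the `/api/v1/applications` endpoint returns the list of applications as JSON. The sketch below only builds that URL. The helper name `history_api_url` is hypothetical, and it assumes plain HTTP without TLS or Kerberos (SPNEGO) authentication in front of History Server.

```shell
# history_api_url HOST [PORT]: print the URL of the History Server REST API
# endpoint that lists applications. Hypothetical helper; PORT defaults to 18092,
# the default value of spark.history.ui.port. Assumes plain HTTP.
history_api_url() {
  printf 'http://%s:%s/api/v1/applications\n' "$1" "${2:-18092}"
}
```

For example, `curl -s "$(history_api_url history.example.internal)"`, where `history.example.internal` is a placeholder for your <history-host>.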

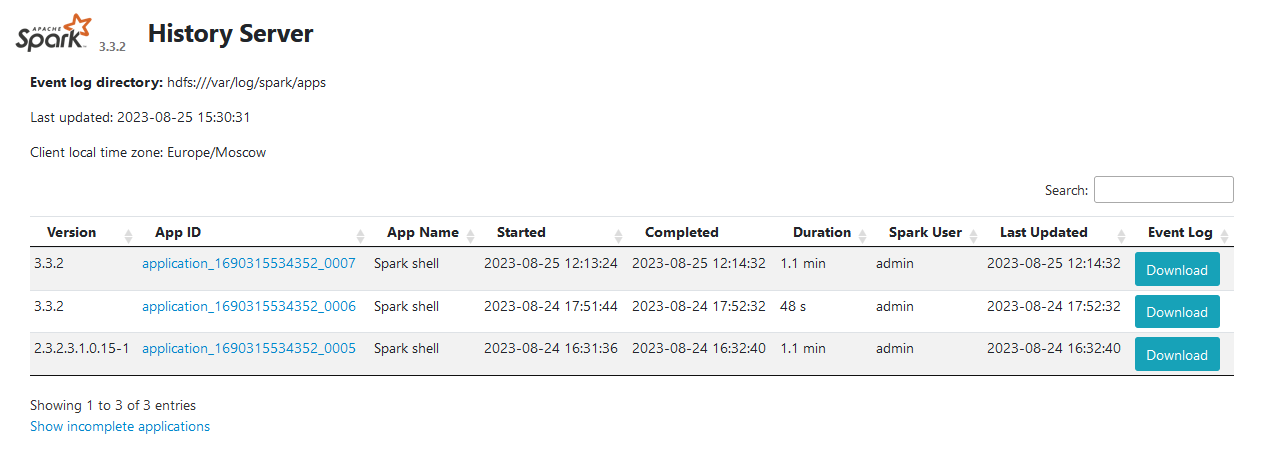

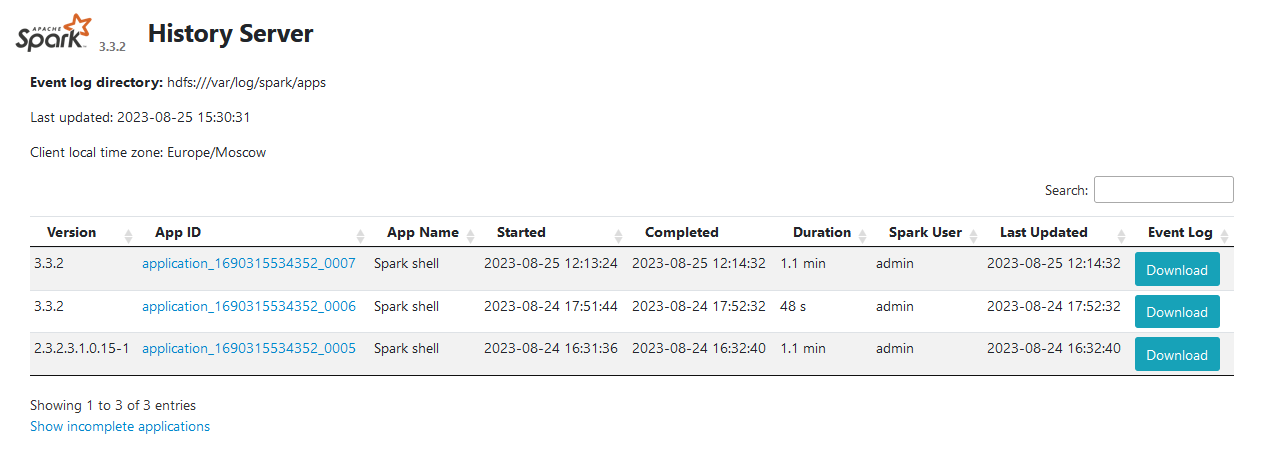

The History Server web UI has the following view.

Spark3 History Server reads event logs from the directory specified by the spark.history.fs.logDirectory property in ADCM (defaults to hdfs:///var/log/spark/apps).

Typically, the spark.history.fs.logDirectory and spark.eventLog.dir properties should point to the same location so that History Server can parse all events generated by Spark applications.
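To check that the two properties actually match on a host, you can compare their values in the Spark configuration file. The POSIX shell sketch below assumes a spark-defaults.conf-style file (one `key value` or `key=value` pair per line, `#` for comments) and treats `hdfs:///path` and `/path` as the same location, which holds only if HDFS is the cluster's default file system. The file location /etc/spark3/conf/spark-defaults.conf and all function names are assumptions.

```shell
# Hypothetical helpers for checking that spark.eventLog.dir and
# spark.history.fs.logDirectory point to the same location.

# conf_value FILE KEY: print the value of KEY from a spark-defaults.conf-style
# file. Simplified parser: accepts "key value" or "key=value", skips lines that
# start with #, and lets the last occurrence of KEY win. Exits 1 if KEY is absent.
conf_value() {
  awk -v key="$2" '
    /^[ \t]*#/ { next }
    {
      line = $0
      sub(/^[ \t]+/, "", line)
      if (match(line, /[ \t=]/) == 0) next
      k = substr(line, 1, RSTART - 1)
      if (k != key) next
      v = substr(line, RSTART + 1)
      sub(/^[ \t=]+/, "", v)
      sub(/[ \t]+$/, "", v)
      val = v
      found = 1
    }
    END {
      if (!found) exit 1
      print val
    }
  ' "$1"
}

# normalize_dir PATH: map hdfs:///path to /path (valid only when HDFS is the
# default file system) and strip trailing slashes so equal paths compare equal.
normalize_dir() (
  p=$1
  case $p in
    hdfs:///*) p=${p#hdfs://} ;;
  esac
  while [ "$p" != "/" ] && [ "${p%/}" != "$p" ]; do
    p=${p%/}
  done
  printf '%s\n' "$p"
)

# same_log_dir FILE: exit 0 if both properties are set in FILE and point to
# the same normalized location, non-zero otherwise.
same_log_dir() (
  event_dir=$(conf_value "$1" spark.eventLog.dir) || exit 1
  history_dir=$(conf_value "$1" spark.history.fs.logDirectory) || exit 1
  [ "$(normalize_dir "$event_dir")" = "$(normalize_dir "$history_dir")" ]
)
```

For example: `same_log_dir /etc/spark3/conf/spark-defaults.conf && echo "same location" || echo "locations differ"`.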

Logging configuration

Under the hood, Spark uses the Log4j library for logging.

Technically, you can configure Spark logging by editing the log4j.properties file, which you can create from the /etc/spark3/conf.dist/log4j.properties.template file. However, editing log4j.properties is beyond the scope of this article.

Instead, the table below lists the ADCM configuration properties that control Spark event logging and History Server.

| Property | Description |
|---|---|
| spark.eventLog.enabled | Enables/disables Spark event logging |
| spark.eventLog.dir | The base directory to store Spark event logs. This parameter should point to the same directory as spark.history.fs.logDirectory |
| spark.history.fs.cleaner.enabled | Defines whether History Server should periodically clean up event logs in the storage |
| spark.history.store.path | Path on the local file system to cache application history data |
| spark.history.ui.port | The port number of the Spark History Server web UI |
| spark.history.fs.logDirectory | The directory with Spark event logs to be loaded by History Server |
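To see which values of these properties are in effect on a particular host, you can filter the Spark configuration file for them. This sketch assumes that ADCM writes the properties to a spark-defaults.conf-style file such as /etc/spark3/conf/spark-defaults.conf (the path and the helper name `logging_props` are assumptions) and that commented-out lines start with `#`.

```shell
# logging_props FILE: print the lines that set the event log and History Server
# properties from the table above. Hypothetical helper; the trailing
# ([[:space:]=]|$) group makes sure only exact property names match.
logging_props() {
  grep -E '^[[:space:]]*spark\.(eventLog\.(enabled|dir)|history\.(fs\.cleaner\.enabled|store\.path|ui\.port|fs\.logDirectory))([[:space:]=]|$)' "$1"
}
```

For example: `logging_props /etc/spark3/conf/spark-defaults.conf`.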