Configure Kerberos authentication based on MIT Kerberos via ADCM

Overview

To kerberize a cluster using MIT KDC, follow the steps below:


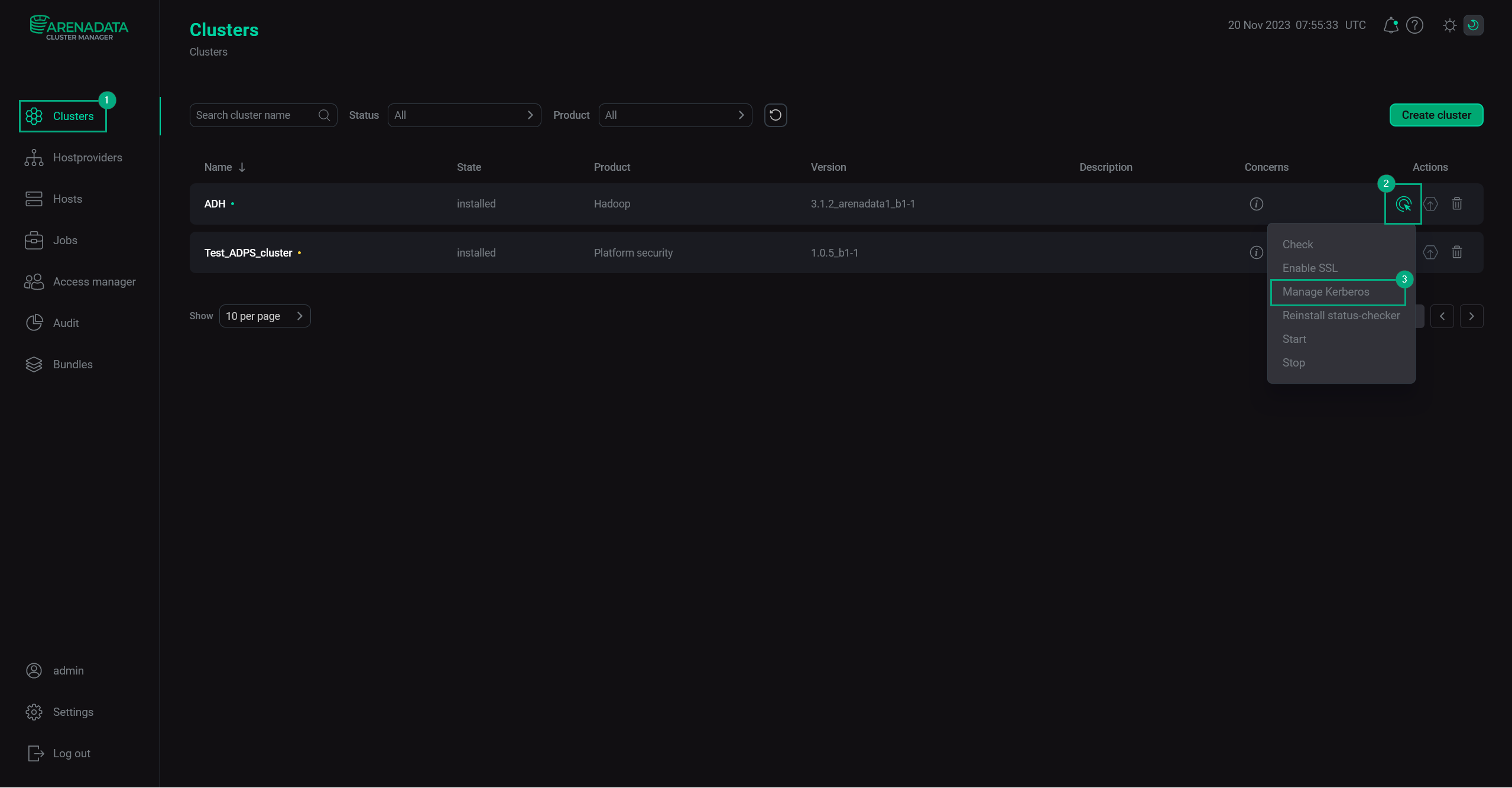

In the ADCM web UI, go to the Clusters page. Select an installed and prepared ADH cluster, and run the Manage Kerberos action.

Manage Kerberos


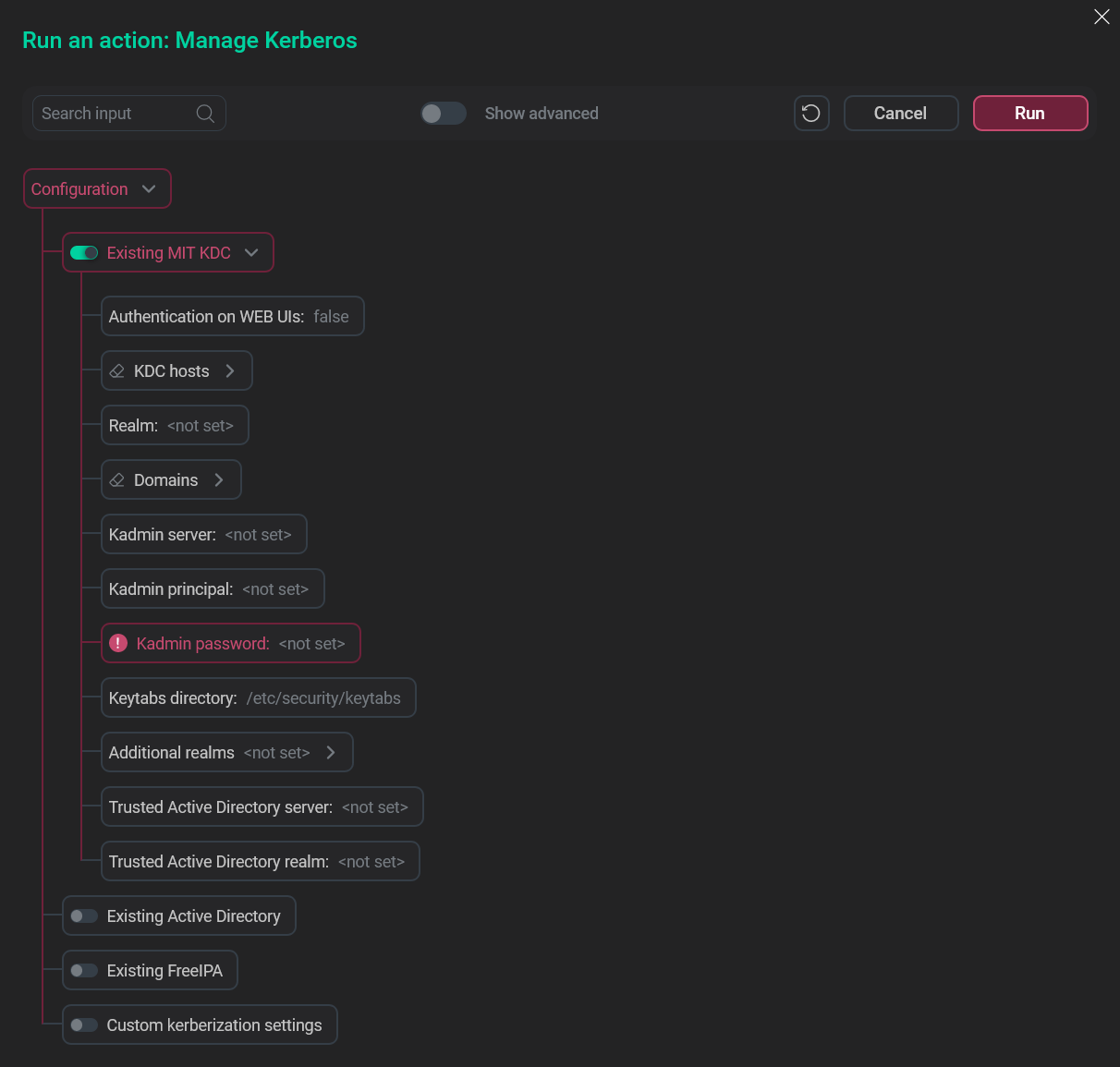

In the pop-up window, turn on the Existing MIT KDC option.

Choose the relevant option


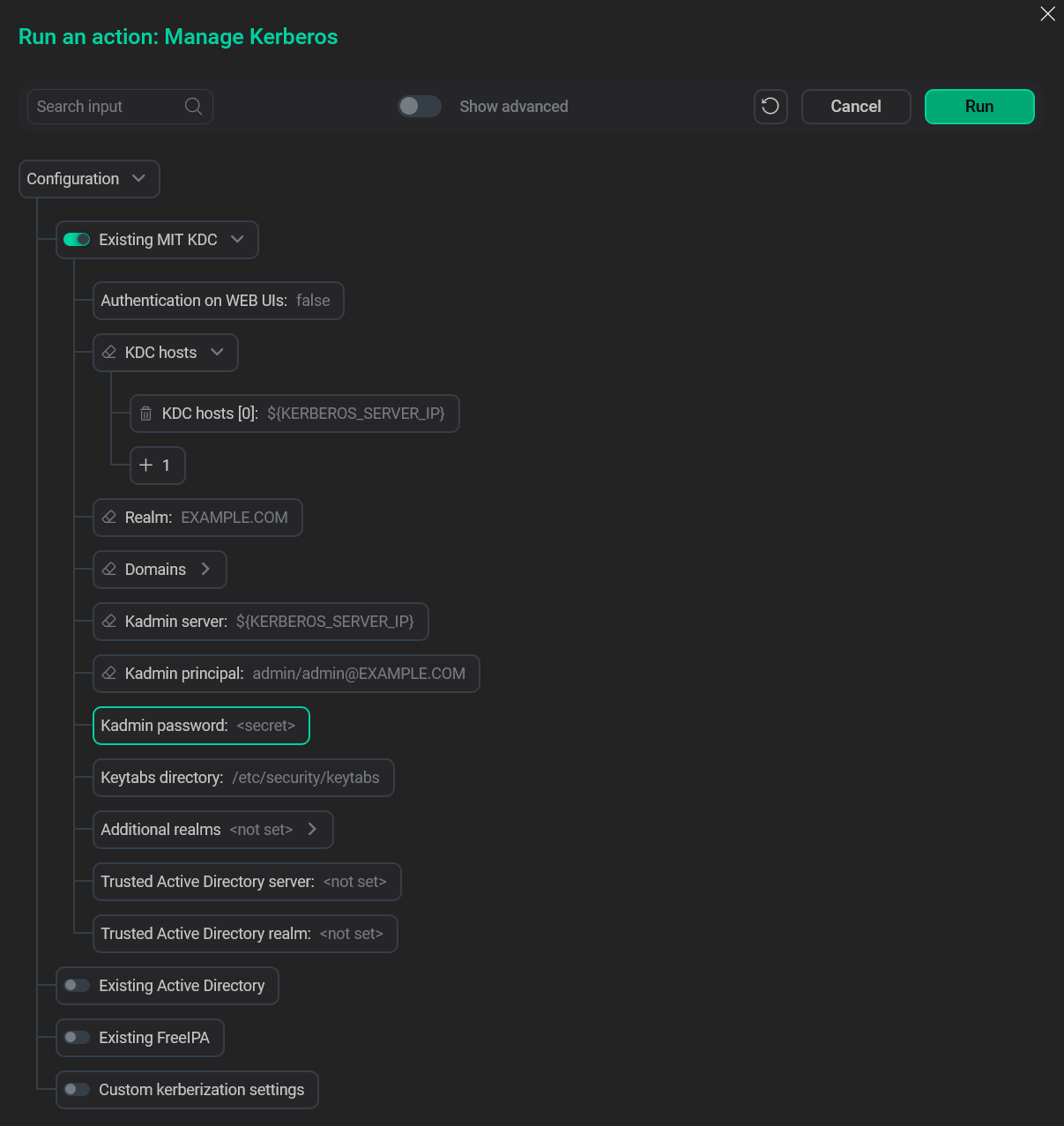

Fill in the prepared MIT Kerberos parameters.

MIT fields


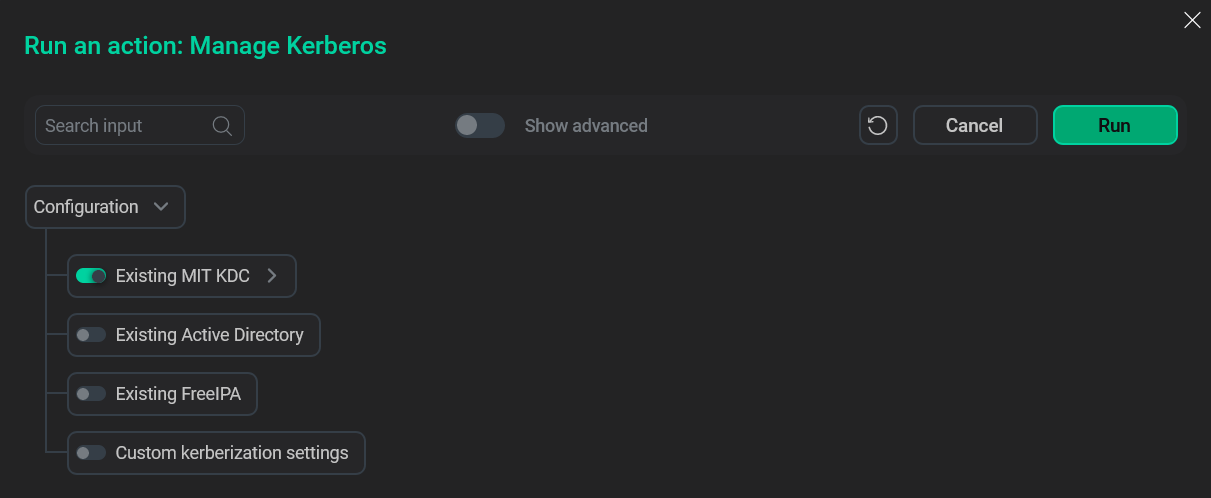

Click Run, wait for the job to complete and proceed to setting up Kerberos in the cluster.

Run the action


MIT Kerberos parameters

| Parameter | Description |
|---|---|
| Authentication on WEB UIs | Enable Kerberos authentication on Web UIs |
| KDC hosts | One or more MIT KDC hosts |
| Realm | A Kerberos realm: a network containing KDC hosts and clients |
| Domains | Domains associated with hosts |
| Kadmin server | A host where |
| Kadmin principal | A principal name used to connect via |
| Kadmin password | A principal password used to connect via |
| Keytabs directory | Directory of the keytab file that contains one or several principals along with their keys |
| Additional realms | Additional Kerberos realms |
| Trusted Active Directory server | An Active Directory server for one-way cross-realm trust from the MIT Kerberos KDC |
| Trusted Active Directory realm | An Active Directory realm for one-way cross-realm trust from the MIT Kerberos KDC |
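To make the role of these fields more concrete, the sketch below prints a minimal `krb5.conf` from four of them (Realm, KDC hosts, Kadmin server, Domains). It is an illustration only and does not reproduce what ADCM writes to the hosts; the function name `render_krb5_conf` and all hostnames are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print a minimal krb5.conf built from values like those in the form.
# The arguments are placeholders, not defaults taken from ADCM.

# render_krb5_conf REALM KDC_HOST KADMIN_HOST DOMAIN
render_krb5_conf() {
  local realm="$1" kdc="$2" kadmin="$3" domain="$4"
  cat <<EOF
[libdefaults]
  default_realm = ${realm}

[realms]
  ${realm} = {
    kdc = ${kdc}
    admin_server = ${kadmin}
  }

[domain_realm]
  .${domain} = ${realm}
  ${domain} = ${realm}
EOF
}
```

For example, `render_krb5_conf EXAMPLE.COM kdc1.example.com kdc1.example.com example.com` prints a file in which the realm, its KDC, its admin server, and the domain-to-realm mapping each appear in their usual section.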

Verify Kerberos installation success using MIT Kerberos

After the cluster is kerberized, every user action requires successful authentication first. The examples below show HDFS requests before and after the cluster is kerberized using MIT Kerberos.

Check HDFS before the cluster is kerberized

$ sudo -u hdfs hdfs dfs -touch /tmp/arenadata_test.txt

$ sudo -u hdfs hdfs dfs -ls /tmp

The output looks as follows:

Found 1 items
-rw-r--r-- 3 hdfs hadoop 0 2022-02-05 11:17 /tmp/arenadata_test.txt

The curl request to create an entity:

$ curl -I -H "Content-Type:application/octet-stream" -X PUT 'http://httpfs_hostname:14000/webhdfs/v1/tmp/arenadata_httpfs_test.txt?op=CREATE&data=true&user.name=hdfs'

The response looks like the following:

HTTP/1.1 201 Created
Date: Sat, 05 Feb 2022 11:23:31 GMT
Cache-Control: no-cache
Expires: Sat, 05 Feb 2022 11:23:31 GMT
Date: Sat, 05 Feb 2022 11:23:31 GMT
Pragma: no-cache
Set-Cookie: hadoop.auth="u=hdfs&p=hdfs&t=simple-dt&e=1628198611064&s=gS6tylp5MZw+aiHs1EzuNfd1qqbJpFAGeGLxTtXxZfg="; Path=/; HttpOnly
Content-Type: application/json;charset=utf-8
Content-Length: 0

The curl request to retrieve data about an HDFS file:

$ curl -H "Content-Type:application/json" -X GET 'http://httpfs_hostname:14000/webhdfs/v1/tmp?op=LISTSTATUS&user.name=hdfs'

The response JSON looks as follows:

{"FileStatuses":{"FileStatus":[{"pathSuffix":"arenadata_httpfs_test.txt","type":"FILE","length":0,"owner":"hdfs","group":"hadoop","permission":"755","accessTime":1628162611089,"modificationTime":1628162611113,"blockSize":134217728,"replication":3}]}}
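The two curl requests above share the same URL layout: host, port 14000, the `/webhdfs/v1` prefix, the HDFS path, and an `op` query parameter. As a rough sketch of that layout, the helper below assembles such a URL; the function name `webhdfs_url` is hypothetical, and the port is simply the one used in these examples.

```shell
#!/usr/bin/env bash
# Sketch: build an HttpFS/WebHDFS URL of the form used in the examples.
# webhdfs_url HOST PATH OP [USER]
webhdfs_url() {
  local host="$1" path="$2" op="$3" user="${4:-}"
  local url="http://${host}:14000/webhdfs/v1${path}?op=${op}"
  # user.name is appended only when a user is given.
  if [ -n "$user" ]; then
    url="${url}&user.name=${user}"
  fi
  printf '%s\n' "$url"
}
```

It could be used as `curl -H "Content-Type:application/json" -X GET "$(webhdfs_url httpfs_hostname /tmp LISTSTATUS hdfs)"`, which is equivalent to the listing request shown above.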

Check HDFS after the cluster is kerberized

HDFS request:

$ sudo -u hdfs hdfs dfs -ls /tmp

Response (unsuccessful):

2022-02-05 11:34:14,753 WARN ipc.Client: Exception encountered while connecting to the server : org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
2022-02-05 11:34:14,765 WARN ipc.Client: Exception encountered while connecting to the server : org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
2022-02-05 11:34:14,767 INFO retry.RetryInvocationHandler: java.io.IOException: DestHost:destPort namenode_host:8020 , LocalHost:localPort namenode_host/namenode_ip:0. Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS], while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over namenode_host/namenode_ip:8020 after 1 failover attempts. Trying to failover after sleeping for 1087ms.
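The message `Client cannot authenticate via:[TOKEN, KERBEROS]` in the log above is the sign that the request was made without a Kerberos ticket. A small sketch of how a script might detect it is shown here; the function name `is_kerberos_auth_failure` is hypothetical, and matching on this exact string is an assumption based on the sample output.

```shell
#!/usr/bin/env bash
# Sketch: read command output on stdin and exit 0 if it contains the
# authentication failure message from the sample log, non-zero otherwise.
is_kerberos_auth_failure() {
  # -F treats the pattern as a fixed string, so the brackets need no escaping.
  grep -qF 'Client cannot authenticate via:[TOKEN, KERBEROS]'
}
```

A possible use is `sudo -u hdfs hdfs dfs -ls /tmp 2>&1 | is_kerberos_auth_failure && echo "No valid ticket: run kinit first"`.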

Creating a ticket:

$ sudo -u hdfs kinit -k -t /etc/security/keytabs/hdfs.service.keytab hdfs/node_hostname@REALM

Response to the repeated HDFS request (successful):

Found 1 items
-rw-r--r-- 3 hdfs hadoop 0 2022-02-05 11:17 /tmp/arenadata_test.txt
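The ticket step above can be wrapped so that a script only calls `kinit` when no usable ticket is cached. The sketch below assumes MIT Kerberos client tools, where `klist -s` runs silently and exits non-zero if the credentials cache is missing or expired. The function names `service_principal` and `ensure_ticket` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compose a service principal and obtain a ticket only when needed.

# service_principal SERVICE HOST REALM  ->  SERVICE/HOST@REALM
service_principal() {
  printf '%s/%s@%s\n' "$1" "$2" "$3"
}

# ensure_ticket KEYTAB PRINCIPAL
# Returns immediately if the current credentials cache is valid; otherwise
# requests a new ticket from the keytab.
ensure_ticket() {
  local keytab="$1" principal="$2"
  if klist -s; then
    return 0
  fi
  kinit -k -t "$keytab" "$principal"
}
```

For instance, `ensure_ticket /etc/security/keytabs/hdfs.service.keytab "$(service_principal hdfs node_hostname REALM)"` mirrors the `kinit` call shown above. Note that `klist -s` only checks that some valid cache exists, not that it belongs to the requested principal.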

HDFS request:

$ sudo -u hdfs curl -H "Content-Type:application/json" -X GET 'http://httpfs_hostname:14000/webhdfs/v1/tmp?op=LISTSTATUS&user.name=hdfs'

Response (unsuccessful):

<html> <head> <meta http-equiv="Content-Type" content="text/html;charset=utf-8"/> <title>Error 401 Authentication required</title> </head> <body><h2>HTTP ERROR 401</h2> <p>Problem accessing /webhdfs/v1/tmp. Reason: <pre> Authentication required</pre></p> </body> </html>

Creating a ticket:

$ sudo -u hdfs kinit -k -t /etc/security/keytabs/HTTP.service.keytab HTTP/node_hostname@REALM

HDFS request:

$ sudo -u hdfs curl -sfSIL -I -H "Content-Type:application/octet-stream" -X PUT --negotiate -u : 'http://httpfs_hostname:14000/webhdfs/v1/tmp/arenadata_httpfs_test.txt?op=CREATE&data=true&user.name=hdfs'

Response (successful):

HTTP/1.1 201 Created
Date: Sat, 05 Feb 2022 11:23:31 GMT
Cache-Control: no-cache
Expires: Sat, 05 Feb 2022 11:23:31 GMT
Date: Sat, 05 Feb 2022 11:23:31 GMT
Pragma: no-cache
Set-Cookie: hadoop.auth="u=hdfs&p=hdfs&t=simple-dt&e=1628198611064&s=gS6tylp5MZw+aiHs1EzuNfd1qqbJpFAGeGLxTtXxZfg="; Path=/; HttpOnly
Content-Type: application/json;charset=utf-8
Content-Length: 0
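When these checks are scripted, it is usually enough to look at the HTTP status code rather than the full headers. The sketch below extracts the last status line from header output, since the output may contain more than one status line (for example, an initial 401 before the negotiated reply), and maps it to a short verdict. The function names `http_status` and `describe_status` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: interpret header output such as that printed by curl -I.

# http_status: read response headers on stdin and print the status code
# from the last status line found.
http_status() {
  awk '{ gsub(/\r/, "") }
       /^HTTP\// { code = $2 }
       END { if (code != "") print code }'
}

# describe_status CODE: map a status code to a short verdict.
describe_status() {
  case "$1" in
    2??) echo "ok" ;;
    401) echo "authentication required" ;;
    *)   echo "unexpected status $1" ;;
  esac
}
```

Piping the header output of either curl request into `http_status` and passing the result to `describe_status` would report `authentication required` for the 401 page and `ok` for the 201 response shown above.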