SSM rule usage examples

Cache files

To move frequently accessed files to the cache, use a rule like the one below:

file: accessCount(1min) > 0 and path matches "/user/sergei/demoCacheFile/*" | cache

With this rule active, SSM caches all files in the demoCacheFile directory that were accessed at least once during the last minute.
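To make the condition concrete, here is a minimal Python sketch of how such a rule could be evaluated for a set of files. It is not SSM's implementation: the `access_log` structure and the function names are hypothetical, and `path matches` is approximated with `fnmatch` glob matching, where `*` also matches `/` (SSM's own matching may differ, for example for nested directories).

```python
from datetime import datetime, timedelta
from fnmatch import fnmatchcase


def access_count(access_log, path, now, window):
    """Count accesses to `path` within (now - window, now].

    `access_log` is a hypothetical list of (path, timestamp) pairs.
    """
    return sum(1 for p, ts in access_log if p == path and now - window < ts <= now)


def files_to_cache(paths, access_log, pattern, now, window=timedelta(minutes=1)):
    """Mimic `accessCount(1min) > 0 and path matches PATTERN` for each path."""
    return [
        p
        for p in paths
        # fnmatchcase approximates `path matches`; its `*` also crosses "/".
        if fnmatchcase(p, pattern) and access_count(access_log, p, now, window) > 0
    ]


if __name__ == "__main__":
    now = datetime(2024, 2, 14, 14, 10)
    log = [
        ("/user/sergei/demoCacheFile/f1.txt", now - timedelta(seconds=30)),
        ("/user/sergei/demoCacheFile/f2.txt", now - timedelta(minutes=5)),
    ]
    paths = [f"/user/sergei/demoCacheFile/f{i}.txt" for i in (1, 2, 3)]
    print(files_to_cache(paths, log, "/user/sergei/demoCacheFile/*", now))
    # ['/user/sergei/demoCacheFile/f1.txt']
```

Only f1.txt qualifies here: f2.txt was last accessed five minutes ago, outside the one-minute window, and f3.txt was not accessed at all.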

For test purposes, several files were created in the /user/sergei/demoCacheFile directory in HDFS. The list action below prints the file names:

list -file /user/sergei/demoCacheFile

The output is:

Action starts at Wed Feb 14 14:05:59 UTC 2024 : List /user/sergei/demoCacheFile
-rw-r--r-- 3 sergei sergei 10 2024-02-14 14:04 /user/sergei/demoCacheFile/f1.txt
-rw-r--r-- 3 sergei sergei 13 2024-02-14 14:04 /user/sergei/demoCacheFile/f2.txt
-rw-r--r-- 3 sergei sergei 12 2024-02-14 14:04 /user/sergei/demoCacheFile/f3.txt
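If you want to process this output in a script, a minimal Python sketch like the following can extract the file entries. It assumes each entry is printed on its own line in the format shown above and that paths contain no spaces; `ListEntry` and `parse_list_output` are hypothetical names, not part of SSM.

```python
from typing import List, NamedTuple


class ListEntry(NamedTuple):
    permissions: str
    replication: int
    owner: str
    group: str
    size: int  # bytes
    modified: str  # "YYYY-MM-DD HH:MM", as printed
    path: str


def parse_list_output(text: str) -> List[ListEntry]:
    """Extract file entries from `list` action output; other lines are skipped."""
    entries = []
    for line in text.splitlines():
        fields = line.split()
        # File entries have 8 fields and start with a permission string like -rw-r--r--.
        if len(fields) != 8 or not fields[0].startswith("-"):
            continue
        perms, repl, owner, group, size, date, time, path = fields
        entries.append(
            ListEntry(perms, int(repl), owner, group, int(size), f"{date} {time}", path)
        )
    return entries
```

The "Action starts at ..." header line does not have the entry shape and is skipped; directory entries (starting with `d`) are skipped as well in this sketch.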

To check that the example works, try accessing one file by running the read action:

read -file /user/sergei/demoCacheFile/f1.txt

If there are no errors, the file should appear on the Cluster Info → Files in cache page.

Move data between cold storage and hot storage

|

IMPORTANT

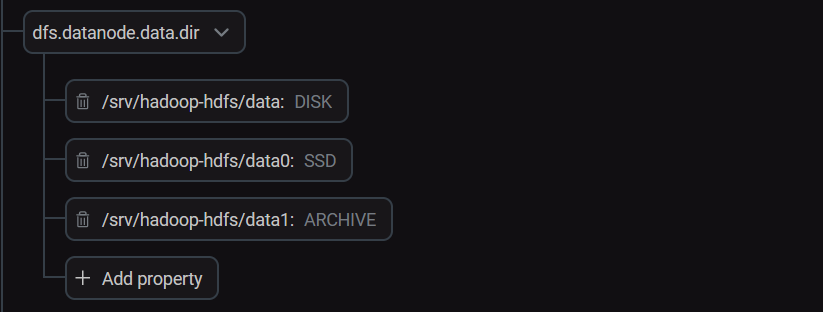

For this example to work, make sure the SSD and ARCHIVE storage types are configured in HDFS.

To move files from cold storage to hot storage, use a rule like the one below:

file: accessCount(1min) > 0 and path matches "/user/sergei/demoCold2Hot/*" | allssd

To check the storage type of a file, call the checkstorage action in the Submit action pane:

checkstorage -file /user/sergei/demoCold2Hot/f1.txt

Before the rule is applied, the command output is:

File offset = 0, Block locations = {10.92.41.28:9866[DISK] 10.92.41.202:9866[DISK] 10.92.41.33:9866[DISK] }

After the file was read and the condition was triggered, the rule was applied and the output became:

File offset = 0, Block locations = {10.92.41.33:9866[SSD] 10.92.41.28:9866[SSD] 10.92.41.202:9866[SSD] }
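To check the result from a script instead of by eye, a minimal Python sketch like the one below parses checkstorage output and tests whether every replica uses a given storage type. It assumes one `File offset = ..., Block locations = {...}` line per block, as in the samples above; the function names are hypothetical. Passing `"ARCHIVE"` instead of `"SSD"` would apply the same check to the hot-to-cold example.

```python
import re
from typing import Dict, List

BLOCK_RE = re.compile(r"File offset = (\d+), Block locations = \{(.*)\}")
# A replica looks like 10.92.41.28:9866[DISK]: host, port, storage type.
REPLICA_RE = re.compile(r"(\S+?):(\d+)\[([A-Z_]+)\]")


def storage_types(output: str) -> Dict[int, List[str]]:
    """Map each block offset to the storage types of its replicas."""
    result = {}
    for line in output.splitlines():
        m = BLOCK_RE.search(line)
        if m:
            result[int(m.group(1))] = [st for _h, _p, st in REPLICA_RE.findall(m.group(2))]
    return result


def all_replicas_on(output: str, storage_type: str) -> bool:
    """True if there is at least one block and every replica uses `storage_type`."""
    blocks = storage_types(output)
    return bool(blocks) and all(
        len(types) > 0 and all(t == storage_type for t in types)
        for types in blocks.values()
    )
```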

Now, you can also see the file on the Cluster Info → Hottest files in last hour page.

To move files from hot storage to cold storage, use a rule like the one below:

file: age > 3min and path matches "/user/sergei/demoHot2Cold/*" | archive

Sync data

To synchronize files in a directory with another HDFS cluster, use a rule like the one below:

file: path matches "/user/sergei/demoSyncSrc/*" | sync -dest hdfs://stikhomirov-adh1.ru-central1.internal/demoSyncDest

If the SSM cluster has a namespace configured, you can use the namespace name instead of the host name in the rule above.
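In this example, /user/sergei/demoSyncSrc/f1.txt is later checked on the destination as /demoSyncDest/f1.txt, which suggests that a synced file keeps its path relative to the source directory. Assuming that mapping (inferred from this example, not from an SSM specification), a minimal Python sketch for computing the expected destination URL of a file, for example to know which path to verify afterwards, could look like this. The function name is hypothetical.

```python
import posixpath
from urllib.parse import urlsplit, urlunsplit


def sync_destination(src_path: str, src_dir: str, dest: str) -> str:
    """Return the expected destination URL of `src_path` for `sync -dest DEST`.

    Assumes the path relative to `src_dir` is preserved under the destination.
    """
    rel = posixpath.relpath(src_path, src_dir)
    if rel == ".." or rel.startswith("../"):
        raise ValueError(f"{src_path} is not inside {src_dir}")
    parts = urlsplit(dest)
    # Keep the scheme and authority (host or namespace name); extend only the path.
    new_path = posixpath.normpath(posixpath.join(parts.path, rel))
    return urlunsplit((parts.scheme, parts.netloc, new_path, "", ""))
```

Because only the path component is rewritten, the same function works whether the destination authority is a host name or a namespace name.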

NOTE

For the sync action to work, SSM must be installed on at least one of the servers (source or destination). The source directory does not have to be local, and the destination does not have to be on a remote server.

To verify the result, compare the checksums of the source and destination files. To get the checksum of the source file, call the checksum action in the Submit action pane:

checksum -file /user/sergei/demoSyncSrc/f1.txt

The output for this file is:

/user/sergei/demoSyncSrc/f1.txt MD5-of-0MD5-of-512CRC32C 00000200000000000000000049c19b14a1077d0a6fc95bee2db8914c

To get the checksum on the destination server, run the following command there:

$ hadoop fs -checksum /demoSyncDest/f1.txt

The checksum matches the one above:

/demoSyncDest/f1.txt MD5-of-0MD5-of-512CRC32C 00000200000000000000000049c19b14a1077d0a6fc95bee2db8914c
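To compare the two outputs from a script, a minimal Python sketch like the one below can be used. It assumes each output is a single `path algorithm checksum` line, as shown above, with no whitespace inside the path; the function names are hypothetical. It also requires the algorithm names to match, since checksums computed with different algorithms or parameters are not comparable.

```python
from typing import Tuple


def parse_checksum_line(line: str) -> Tuple[str, str, str]:
    """Split a `path algorithm checksum` line (tabs or spaces) into its parts."""
    path, algorithm, checksum = line.split()  # raises ValueError on unexpected input
    return path, algorithm, checksum


def checksums_match(src_line: str, dest_line: str) -> bool:
    """True if both lines report the same algorithm and checksum (paths may differ)."""
    _, src_alg, src_sum = parse_checksum_line(src_line)
    _, dest_alg, dest_sum = parse_checksum_line(dest_line)
    return src_alg == dest_alg and src_sum.lower() == dest_sum.lower()
```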