Use Kerberos with MS Active Directory in Kafka

This article describes the first steps in Kafka after installing Kerberos with MS Active Directory.

Verify installed Kerberos

-

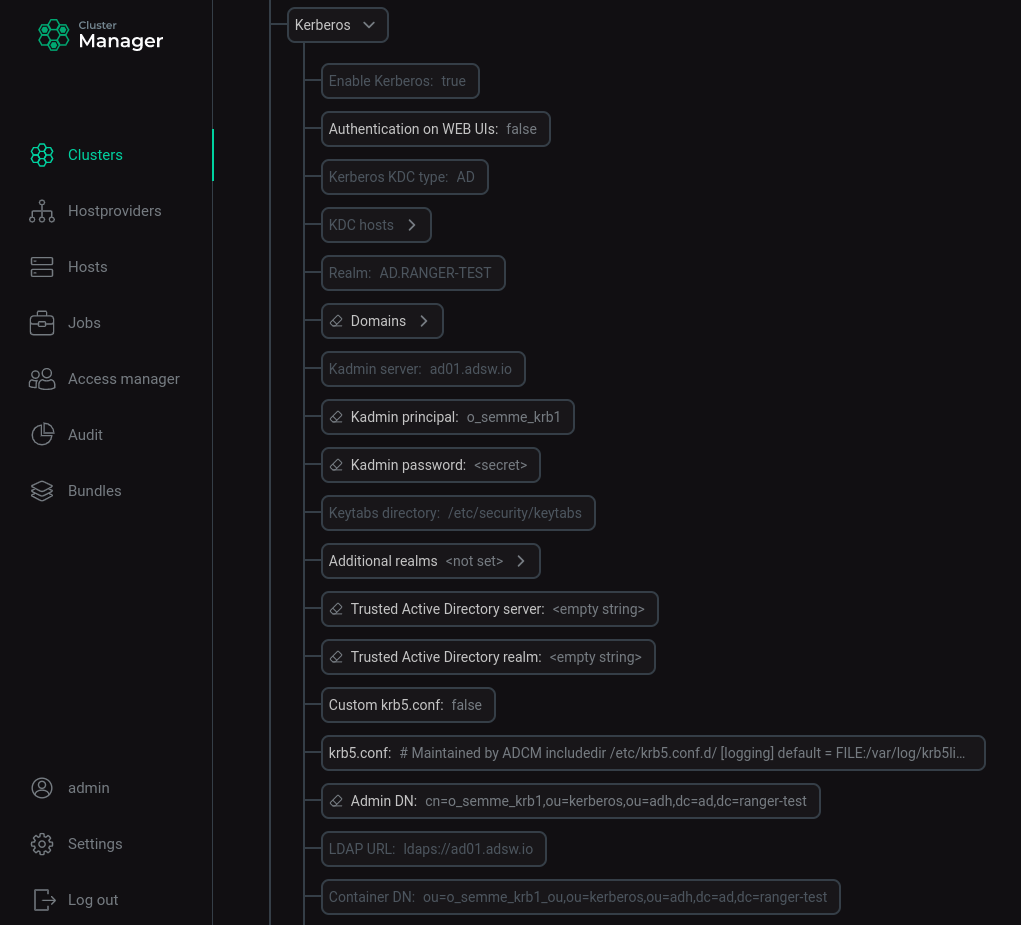

Check the settings set for Kerberos in the cluster configurations.

Go to the cluster configuration, select Show advanced, find the section with Kerberos configurations, expand it.

Installed ADS cluster configuration settings for Kerberos

Installed ADS cluster configuration settings for Kerberos -

Check the security and authentication configuration of the Kafka brokers.

On each host with a Kafka broker, issue the command:

    $ sudo vim /usr/lib/kafka/config/server.properties

Ensure that in the server.properties file of each Kafka broker, the lines defining the security protocol are set to SASL_PLAINTEXT and the lines defining the authentication mechanism are set to GSSAPI:

    security.inter.broker.protocol=SASL_PLAINTEXT
    sasl.mechanism.inter.broker.protocol=GSSAPI
    sasl.enabled.mechanisms=GSSAPI

Verify that, after Kerberos was installed, the listeners parameter in the server.properties group of the Kafka service settings has changed from PLAINTEXT://:9092 to SASL_PLAINTEXT://:9092.
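The server.properties checks above can also be scripted instead of being done by eye in an editor. The following is a minimal sketch, not part of ADS: `check_broker_sasl` is a hypothetical helper name, and the script assumes that the properties are written without spaces around `=`.

```shell
#!/bin/sh
# Sketch: verify that a broker's server.properties carries the SASL/GSSAPI
# settings listed above. check_broker_sasl is a hypothetical helper name.

check_broker_sasl() {
    props_file=$1
    status=0
    for expected in \
        'security.inter.broker.protocol=SASL_PLAINTEXT' \
        'sasl.mechanism.inter.broker.protocol=GSSAPI' \
        'sasl.enabled.mechanisms=GSSAPI'
    do
        # -x matches the whole line, -F treats the pattern as a fixed string.
        if ! grep -qxF "$expected" "$props_file"; then
            echo "missing or different: $expected" >&2
            status=1
        fi
    done
    # The listener must use SASL_PLAINTEXT rather than plain PLAINTEXT.
    if ! grep -Eq '^listeners=.*SASL_PLAINTEXT://' "$props_file"; then
        echo "listeners does not use SASL_PLAINTEXT" >&2
        status=1
    fi
    return "$status"
}
```

On a broker host it could be called as `check_broker_sasl /usr/lib/kafka/config/server.properties`. A non-zero exit status means that at least one setting differs from the values listed above.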

Using ldapsearch, the command-line utility for searching the LDAP directory, you can check from a Kafka broker host the principals generated in the AD.RANGER-TEST environment under a given Container DN.

Enter the command, specifying the Admin DN, the Container DN, and the user password specified when starting LDAP in ADCM:

    $ ldapsearch -v -H ldaps://ldap_host -x -D "cn=admin,ou=kerberos,ou=adh,dc=ad,dc=ranger-test" -b "ou=admin_ou,ou=kerberos,ou=adh,dc=ad,dc=ranger-test" -w Password

As a result, information is displayed for each account created in LDAP for each instance of each ADS service. Example output for one account:

    # kafka/sov-ads-test-5.ru-central1.internal, admin_ou, kerberos, adh, ad.ranger-test
    dn: CN=kafka/sov-ads-test-5.ru-central1.internal,OU=admin_ou,OU=kerberos,OU=adh,DC=ad,DC=ranger-test
    objectClass: top
    objectClass: person
    objectClass: organizationalPerson
    objectClass: user
    cn: kafka/sov-ads-test-5.ru-central1.internal
    distinguishedName: CN=kafka/sov-ads-test-5.ru-central1.internal,OU=admin_ou,OU=kerberos,OU=adh,DC=ad,DC=ranger-test
    instanceType: 4
    whenCreated: 20220816100038.0Z
    whenChanged: 20220816100042.0Z
    uSNCreated: 14950769
    uSNChanged: 14950786
    name: kafka/sov-ads-test-5.ru-central1.internal
    objectGUID:: S/7BU9kZcE6pOwqxKUDJRQ==
    userAccountControl: 66048
    badPwdCount: 0
    codePage: 0
    countryCode: 0
    badPasswordTime: 0
    lastLogoff: 0
    lastLogon: 133053546143620502
    pwdLastSet: 133051176384557312
    primaryGroupID: 513
    objectSid:: AQUAAAAAAAUVAAAAKcXXWJ6nBwdKJbrR0v8QAA==
    accountExpires: 0
    logonCount: 50
    sAMAccountName: $IUV110-ESD8M6LCFJGL
    sAMAccountType: 805306368
    userPrincipalName: kafka/sov-ads-test-5.ru-central1.internal@AD.RANGER-TEST
    servicePrincipalName: kafka/sov-ads-test-5.ru-central1.internal
    objectCategory: CN=Person,CN=Schema,CN=Configuration,DC=ad,DC=ranger-test
    dSCorePropagationData: 16010101000000.0Z
    lastLogonTimestamp: 133051176422839302

NOTE

For a complete description of the ldapsearch command-line utility features and applicable options, see the ldapsearch documentation. The syntax of the ldapsearch command may differ depending on the OS used.

Check that the *.service.keytab files used for storing passwords are present on the hosts with installed services.

On each host with installed services, issue the command:

    $ ls -la /etc/security/keytabs/

The file listing shows that a *.service.keytab file has been created for each service installed on the host:

    total 16
    drwxr-xr-x. 2 root      root       102 Aug  9 20:55 .
    drwxr-xr-x. 7 root      root      4096 Aug  9 19:38 ..
    -rw-------. 1 kafka     kafka      826 Aug  9 20:54 kafka.service.keytab
    -rw-------. 1 zookeeper zookeeper  858 Aug  9 20:55 zookeeper.service.keytab
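The keytab listing above shows each file with mode `-rw-------`, readable only by its service user. A small script can confirm this on every host. This is a sketch under assumptions: `check_keytabs` is a hypothetical helper name, and `stat -c` is the GNU coreutils form, so it applies to Linux hosts.

```shell
#!/bin/sh
# Sketch: confirm that *.service.keytab files exist in a directory and that
# each one has mode 600, as in the listing above.
# check_keytabs is a hypothetical helper name; stat -c requires GNU coreutils.

check_keytabs() {
    keytab_dir=$1
    status=0
    found=0
    for keytab in "$keytab_dir"/*.service.keytab; do
        # If the glob matched nothing, the literal pattern is returned; skip it.
        [ -e "$keytab" ] || continue
        found=1
        mode=$(stat -c '%a' "$keytab")
        if [ "$mode" != "600" ]; then
            echo "$keytab has mode $mode, expected 600" >&2
            status=1
        fi
    done
    if [ "$found" -eq 0 ]; then
        echo "no *.service.keytab files in $keytab_dir" >&2
        status=1
    fi
    return "$status"
}
```

It could be called as `check_keytabs /etc/security/keytabs`. To see which principals a keytab holds, the MIT Kerberos command `klist -kt` followed by the keytab path can be run as a user that is allowed to read the file.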

Client connection to Kafka

By default, every user in the Active Directory database that has a principal in a given realm has rights to connect to the Kafka cluster and perform actions on topics.

Create a JAAS file for a user

A JAAS file (Java Authentication and Authorization Service) must be created for all principals. It specifies how tickets for a particular principal will be used.

NOTE

For broker principals, connection parameters are created automatically in the kafka-jaas.conf file after kerberization. To view the contents of the file, enter the following command:

    $ sudo vim /usr/lib/kafka/config/kafka-jaas.conf

For client principals, you need to create the JAAS file yourself.

Run the command:

    $ sudo vim /tmp/client.jaas

Write the following data to the file:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useTicketCache=true;
    };

where useTicketCache is a parameter specifying whether the ticket for this user is obtained from the ticket cache. If you set this parameter to true, you must create a user ticket before connecting to Kafka.
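When the client JAAS file has to be created on several hosts or from a provisioning script, an interactive editor is inconvenient. The sketch below writes the same content with a here-document. `write_client_jaas` is a hypothetical helper name, and the target path is passed in by the caller.

```shell
#!/bin/sh
# Sketch: create the client JAAS file non-interactively.
# write_client_jaas is a hypothetical helper name; the content is the
# KafkaClient section shown above.

write_client_jaas() {
    jaas_path=$1
    # The quoted 'EOF' delimiter prevents any shell expansion inside the body.
    cat > "$jaas_path" <<'EOF'
KafkaClient {
    com.sun.security.auth.module.Krb5LoginModule required
    useTicketCache=true;
};
EOF
}
```

For the path used in this article, the call would be `write_client_jaas /tmp/client.jaas`, run as a user that is allowed to write to that location.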

Create a configuration file .properties for the user

To create a configuration file .properties for the user, run the command:

    $ sudo vim /tmp/client.properties

Fill the file with the following data:

    security.protocol=SASL_PLAINTEXT
    sasl.mechanism=GSSAPI
    sasl.kerberos.service.name=kafka

Connect a user to Kafka (create tickets) and work with .sh files (scripts)

Open a terminal session and connect to one of the Kafka brokers.

Create a ticket for the user by entering the password:

    $ kinit -p admin-kafka@AD.RANGER-TEST
    Password for admin-kafka@AD.RANGER-TEST:

NOTE

In this example, admin-kafka is a user with an entry in the Active Directory database and a principal in the AD.RANGER-TEST realm.

For a complete description of the kinit command functions and applicable options, see the kinit documentation.

Check the ticket:

    $ klist
    Ticket cache: FILE:/tmp/krb5cc_1000
    Default principal: admin-kafka@AD.RANGER-TEST

    Valid starting       Expires              Service principal
    08/19/2022 14:18:33  08/20/2022 00:18:33  krbtgt/AD.RANGER-TEST@AD.RANGER-TEST
            renew until 08/20/2022 14:17:35

Export the generated client.jaas file as a JVM option for the given user using the KAFKA_OPTS environment variable:

    $ export KAFKA_OPTS="-Djava.security.auth.login.config=/tmp/client.jaas"

Create a topic, specifying the path to the created client.properties file:

    $ /usr/lib/kafka/bin/kafka-topics.sh --create --topic test-topic --bootstrap-server sov-ads-test-1.ru-central1.internal:9092,sov-ads-test-2.ru-central1.internal:9092,sov-ads-test-3.ru-central1.internal:9092 --command-config /tmp/client.properties

Get a confirmation:

    Created topic test-topic.

Write messages to the topic, specifying the path to the created client.properties file:

    $ /usr/lib/kafka/bin/kafka-console-producer.sh --topic test-topic --bootstrap-server sov-ads-test-1.ru-central1.internal:9092,sov-ads-test-2.ru-central1.internal:9092,sov-ads-test-3.ru-central1.internal:9092 --producer.config /tmp/client.properties
    >One
    >Two
    >Three
    >Four
    >Five

Open terminal session 2 and connect to one of the Kafka brokers.

Create a ticket for the user reader:

    $ kinit -p reader@ADS-KAFKA.LOCAL

Enter the password specified when creating the user.

Check the ticket:

    $ klist
    Ticket cache: FILE:/tmp/krb5cc_1000
    Default principal: reader@ADS-KAFKA.LOCAL

    Valid starting       Expires              Service principal
    08/10/2022 21:30:47  08/11/2022 21:30:47  krbtgt/ADS-KAFKA.LOCAL@ADS-KAFKA.LOCAL

Export the generated client.jaas file as a JVM option for the given user using the KAFKA_OPTS environment variable:

    $ export KAFKA_OPTS="-Djava.security.auth.login.config=/tmp/client.jaas"

Read messages from the topic, specifying the path to the created client.properties file:

    $ /usr/lib/kafka/bin/kafka-console-consumer.sh --topic test-topic --from-beginning --bootstrap-server sov-ads-test-1.ru-central1.internal:9092,sov-ads-test-2.ru-central1.internal:9092,sov-ads-test-3.ru-central1.internal:9092 --consumer.config /tmp/client.properties

Messages received:

    One
    Two
    Three
    Four
    Five

Verify that the received messages are correct.
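The Kafka scripts in the procedure above depend on three things being in place in each terminal session: a valid ticket, the KAFKA_OPTS variable pointing at the JAAS file, and a client.properties file with the SASL settings. A pre-flight check along the following lines can catch a missing piece before a script fails with an authentication error. This is a sketch only: the function names are hypothetical, paths containing spaces are not handled, and `klist -s` is the MIT Kerberos silent mode, which exits with a non-zero status when the credentials cache cannot be read or has expired.

```shell
#!/bin/sh
# Sketch: pre-flight checks before running the Kafka command-line scripts.
# All function names here are hypothetical helpers, not part of Kafka or ADS.

# Print the JAAS path passed via -Djava.security.auth.login.config in KAFKA_OPTS.
jaas_from_kafka_opts() {
    # Unquoted on purpose: split KAFKA_OPTS into separate JVM options.
    for opt in ${KAFKA_OPTS:-}; do
        case $opt in
            -Djava.security.auth.login.config=*)
                printf '%s\n' "${opt#-Djava.security.auth.login.config=}"
                return 0
                ;;
        esac
    done
    return 1
}

# Check the JAAS file referenced by KAFKA_OPTS and the client properties file.
preflight() {
    client_props=$1
    jaas=$(jaas_from_kafka_opts) || {
        echo "KAFKA_OPTS does not set java.security.auth.login.config" >&2
        return 1
    }
    [ -r "$jaas" ] || {
        echo "JAAS file is not readable: $jaas" >&2
        return 1
    }
    grep -qxF 'security.protocol=SASL_PLAINTEXT' "$client_props" || {
        echo "security.protocol=SASL_PLAINTEXT not found in $client_props" >&2
        return 1
    }
}

# Succeeds only if the credentials cache holds an unexpired ticket.
has_valid_ticket() {
    klist -s
}
```

In a session prepared as described above, `has_valid_ticket && preflight /tmp/client.properties` would be expected to succeed. If it fails, the message on standard error names the missing piece.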