Configure data storages

ADQM can store table data on multiple disks of various types, group them into volumes, and automatically move data between volumes according to the specified conditions.

Overview

ADQM supports the following types of storages for table data:

-

Local file system. It is possible to store data on multiple block devices — for example, it can be useful when table data is divided into "hot" (the most recent data that is regularly queried and requires a small amount of space) and "cold" (historical data that is queried rarely). In this case, the "hot" data can be located on fast local disks (for example, on NVMe SSD disks or in memory), while the "cold" data — on slower ones (for example, HDD). The ability to add new devices also simplifies the storage extension process.

-

External S3 disks. This storage option provides the ability to store huge amounts of information without worrying about the fault tolerance, reliability, and scalability of the storage.

It is also possible to create cache over disks that store data (to increase the speed of fetching data from external disks with high latency) and encrypted disks (to encrypt sensitive data of the ADQM cluster).

Basic concepts used when configuring a data storage in ADQM:

-

Disk — additional block device mounted on the file system (local disk), external S3 storage, cache or encrypted disk.

-

Volume — ordered set of equal disks.

-

Storage policy — set of volumes and the specified rules to write table data.

General scheme of work with storages in ADQM:

-

Create a storage policy that specifies disks to store data.

To configure disks, volumes, and storage policies, use configuration parameters of the ADQMDB service (see the corresponding sections below). Specified parameter values are stored within the <storage_configuration> tag in the /etc/clickhouse-server/config.d/storage.xml file.

Disks

To manage disks in ADQM, use the ADCM interface:

-

Open the ADQMDB service’s configuration page (ADQM cluster → Services → ADQMDB → Primary Configuration).

-

Enable the Show advanced option.

-

Activate a group that corresponds to a particular disk type:

-

Enable additional local storage — local disks;

-

Enable S3 storage — S3 disks;

-

Enable cache — cache disks;

-

Enable encrypted storage — encrypted disks.

-

-

In the expanded section, you can add/remove and configure disks of the corresponding type. Parameters available for each disk type are described below.

Local disksParameter Description disk_name

Disk name (should be unique)

disk_path

Absolute path to the directory where the block device is mounted. When specifying a relative path (without the initial

/), an error occurs informing that the directory does not existkeep_free_space_bytes

Amount of free disk space to be reserved (in bytes)

If the Recursively create directories option is enabled, ADQM will automatically create the specified directories for local disks (if these directories do not exist) and assign the

clickhouse:clickhouseowner with the0770permission mask to them.S3 disksParameter Description disk_name

Disk name (should be unique)

endpoint

Bucket URL with the path to the root directory on the server where the data is stored

access_key_id

S3 access key id

secret_access_key

S3 access secret key

proxy

List of proxy servers

custom_parameters

Other parameters (for a full list of parameters available for S3 disks, see Configuring the S3 disk in the ClickHouse documentation)

Cache disksParameter Description parent_disk_name

Name of the parent disk (external S3 disk) over which the cache is created

cache_disk_name

Cache disk name (should be unique)

cache_disk_path

Absolute path to the directory to which the cache will be written

max_size_bytes

Maximum size of the cache (in bytes)

cache_on_write_operations

Enables caching data on any write operations (

INSERTqueries, background merges)enable_filesystem_query_cache_limit

Allows limiting the size of the cache which is downloaded within each query (depends on the

max_query_cache_sizeuser setting)enable_cache_hits_threshold

Number that defines how many times data needs to be read before it is cached

do_not_evict_index_and_mark_files

Allows you not to evict small frequently used files according to the cache policy

max_file_segment_size_bytes

Maximum size of a cache file (in bytes)

max_elements

Limit for a number of cache files

If the Recursively create directories option is enabled, ADQM will automatically create the specified directories for storing cache (if these directories do not exist) and assign the

clickhouse:clickhouseowner with the0770permission mask to them.Encrypted disksParameter Description parent_disk_name

Name of the parent disk over which the encrypted file is created

encrypted_disk_name

Encrypted disk name (should be unique)

encrypted_disk_path

Absolute or relative path to the directory on the disk where encrypted data will be saved. When specifying a relative path, a subdirectory is created on the parent disk

encryption_algorithm

Data encryption algorithm. Possible values:

AES_128_CTR,AES_192_CTR,AES_256_CTRencryption_key_hex

Key as a hexadecimal value used for encryption (for example,

00112233445566778899AABBCCDDEEFF)If the Recursively create directories option is enabled, ADQM will automatically create the specified directories for storing encrypted data (if these directories do not exist) and assign the

clickhouse:clickhouseowner with the0770permission mask to them.You can use the Enable… switch to temporarily disable a group of disks by the type, but keep in mind that a storage policy cannot refer to an unconfigured disk.

-

Click Save and execute the Reconfig action for the ADQMDB service to save the information about the added disks in the configuration (to modify existing disks, it is also required to restart the service — to do this, enable the Restart option in the Reconfig action configuration window).

To view information on disks configured in ADQM, you can use the system.disks system table:

SELECT

name,

path,

formatReadableSize(free_space) AS free,

formatReadableSize(total_space) AS total,

formatReadableSize(keep_free_space) AS reserved

FROM system.disks;When installing ADQM, one local disk (default) is configured by default. Data is saved to the directory specified via the Data path parameter in the Directories section on the ADQMDB service’s configuration page (default is /var/lib/clickhouse/).

┌─name────┬─path─────────────────┬─free──────┬─total─────┬─reserved─┐ │ default │ /var/lib/clickhouse/ │ 39.33 GiB │ 49.99 GiB │ 0.00 B │ └─────────┴──────────────────────┴───────────┴───────────┴──────────┘

Storage policies

A storage policy groups disks into one or more volumes, defines rules to write table data on disks, and specifies how data should be moved between volumes or individual disks within volumes.

You can add and configure storage policies in the ADCM interface:

-

Go to the ADQMDB service’s configuration page (ADQM cluster → Services → ADQMDB → Primary Configuration).

-

Enable the Show advanced option.

-

In the Storage policies section, add required storage policies to the Policies list.

Storage policy settings Parameter Description policy_name

Storage policy name (should be unique)

move_factor

Proportion of the free space to be available on a volume. When the amount of available space becomes less than the specified factor, data will automatically start moving to the next volume (if any). To move data parts, ADQM sorts existing data parts by size in the descending order and selects parts whose total size is sufficient to satisfy the

move_factorcondition. If the total size of all parts is insufficient, all parts will be movedvolumes

List of policy volumes (a policy should contain at least one volume). Volume configuration parameters are:

-

volume_name — volume name (should be unique).

-

max_data_part_size_bytes — maximum size of a data part that can be stored on any disk of the volume. If a merged part size is expected to be larger than the

max_data_part_size_bytesvalue, this part will be written to the next volume. Basically this feature allows you to keep small data parts (new data) on a "hot" volume (SSD) and move them to a "cold" volume (HDD) when they become large (see the example below). Do not use this option if a policy has only one volume. -

prefer_not_to_merge — disables merging data parts stored on the volume. This allows you to control how ADQM works with slow disks.

-

volume_disks — names of disks to be grouped into the volume (a volume should contain at least one disk). If a cache disk is created over a disk with data, then the cache disk should be specified for a volume.

The order of volumes in the storage policy is important. Once a volume is overfilled, data is migrated to the next one. The order of disks in a volumes is also important — data is written to disks in turn.

-

-

Click Save and execute the Reconfig action for the ADQMDB service to save the information about the added storage policies in the configuration (to modify existing storage policies, it is also required to restart the service — to do this, enable the Restart option in the Reconfig action configuration window).

You can get information on storage policies configured in ADQM from the system.storage_policies system table:

SELECT policy_name, volume_name, disks FROM system.storage_policies;By default, ADQM contains a single storage policy (default) with a single volume, which specifies that all table data is written to the default disk:

┌─policy_name─┬─volume_name─┬─disks───────┐ │ default │ default │ ['default'] │ └─────────────┴─────────────┴─────────────┘

Assign storage policy to table

After storage policies are configured, you can assign them to tables. To do this, specify a storage policy name as the storage_policy parameter value using the SETTINGS clause when creating a table. The basic syntax of a query to create a MergeTree table with a specified storage policy can be written as follows:

CREATE TABLE <table_name> (<column_definitions>)

ENGINE = MergeTree

ORDER BY <sorting_key>

SETTINGS storage_policy = '<policy_name>';By default, a table uses the default storage policy.

You can see which storage policy is assigned to a table and where the table data is stored in the storage_policy and data_paths fields of the system.tables system table. For example, run the following queries to create a table without specifying a storage policy, populate it with test data, and display information about the assigned storage policy and directory where data is written:

CREATE TABLE test_table (id Int32) Engine=MergeTree ORDER BY id;INSERT INTO test_table SELECT rand() FROM numbers(10000000);SELECT name, storage_policy, data_paths FROM system.tables WHERE name = 'test_table' FORMAT Vertical;Row 1: ────── name: test_table storage_policy: default data_paths: ['/var/lib/clickhouse/store/50d/50d13b59-89d8-44ef-87f6-08ee8f71066c/']

To change the storage policy after the table has been created, use the ALTER TABLE … MODIFY SETTING query. Note that a new policy should contain all volumes and disks of the previous policy with the same names.

Example of tiered storage configuration

In this example, two block devices of different types are used for data storage — SSD and HDD disks mounted on the following directories:

-

/mnt/ssd/adqm/

-

/mnt/hdd/adqm/

Each directory is assigned the clickhouse owner:

$ sudo chown clickhouse:clickhouse -R /mnt/ssd/adqm/ /mnt/hdd/adqm/-

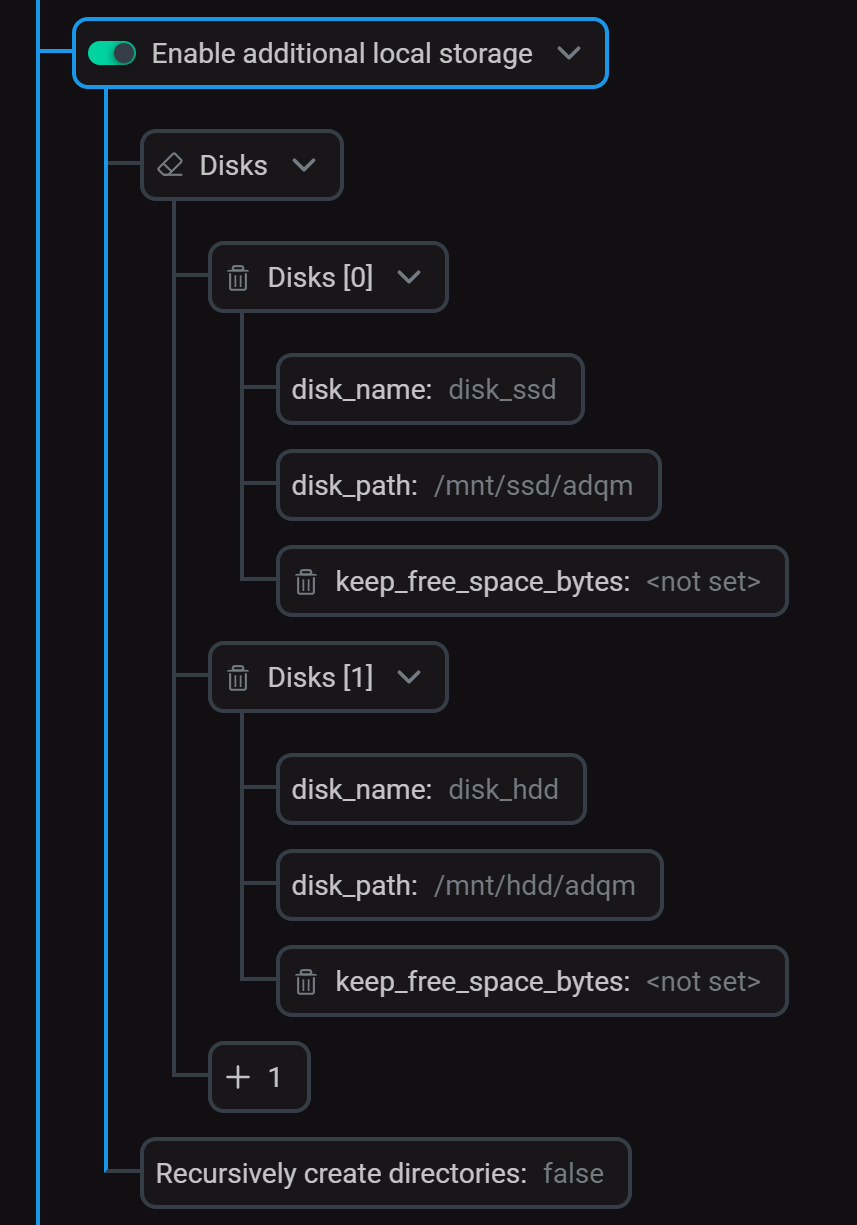

Specify local disks in the Enable additional local storage section (previously enabled) of the ADQMDB service’s configuration page.

Configure local disks

Configure local disks -

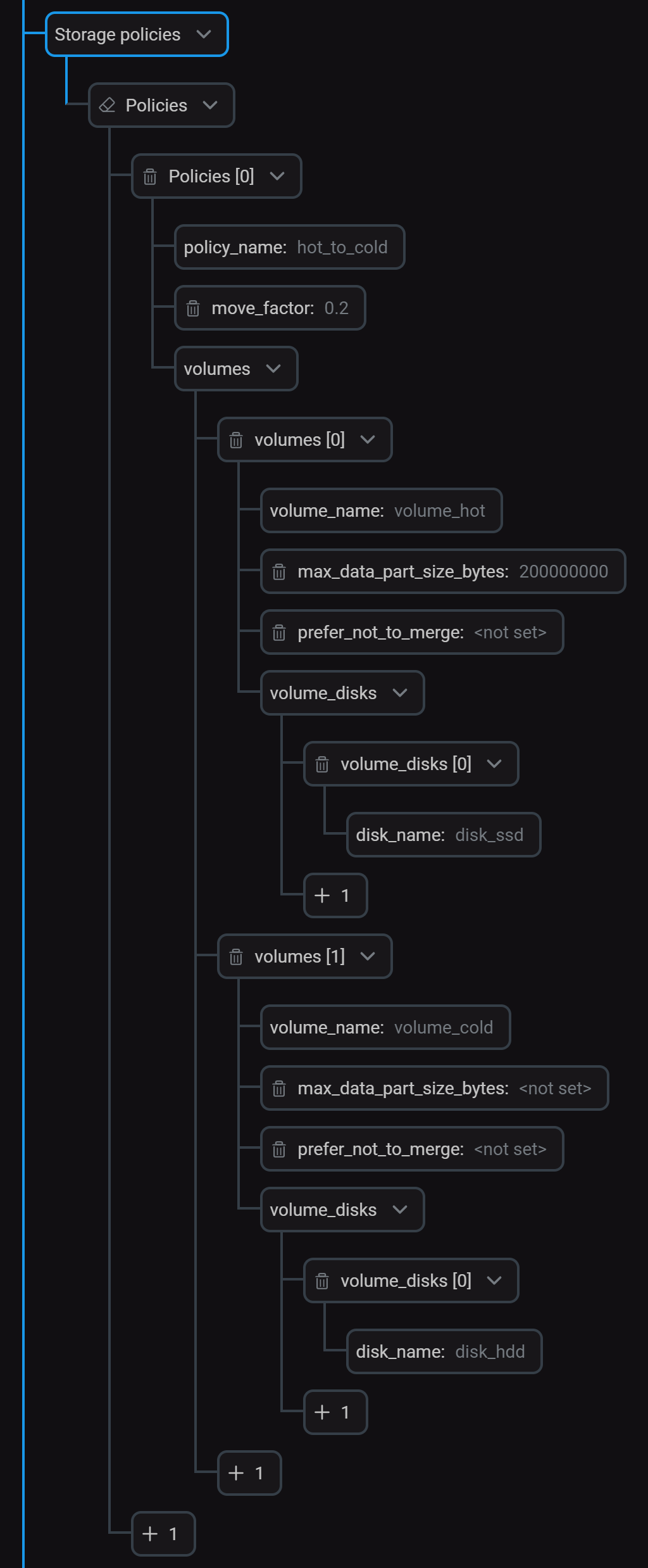

In the Storage policies section, create a policy (

hot_to_cold) that includes two volumes for storing "hot" and "cold" data. Configure storage policy

Configure storage policyThe

volume_hotvolume contains one SSD disk (disk_ssd). The maximum size of a data part that can be stored on this volume is 200 MB. All data parts with the size larger than 200 MB will be moved to thevolume_coldvolume, which contains one HDD disk (disk_hdd). The MergeTree table engine constantly performs background data merges, merging newly inserted small data parts into larger parts over time. That is, large data parts appear after several merges, respectively, we can assume that the larger the part, the older it is.Also, once the

disk_ssddisk is more than 80% full, data will be transferred to thedisk_hdddisk in the background. -

Click Save and run the Reconfig action for the service to apply the changes.

After that, information about disks and policies will appear in the appropriate tags inside the

<storage_configuration>section in the /etc/clickhouse-server/config.d/storage.xml file.<storage_configuration> <disks> <disk_ssd> <type>local</type> <path>/mnt/ssd/adqm/</path> </disk_ssd> <disk_hdd> <type>local</type> <path>/mnt/hdd/adqm/</path> </disk_hdd> </disks> <policies> <hot_to_cold> <volumes> <volume_hot> <disk>disk_ssd</disk> <max_data_part_size_bytes>200000000</max_data_part_size_bytes> </volume_hot> <volume_cold> <disk>disk_hdd</disk> </volume_cold> </volumes> <move_factor>0.2</move_factor> </hot_to_cold> </policies> </storage_configuration> -

Create a table that uses the new storage policy:

CREATE TABLE test_table1 (id Int32) Engine=MergeTree ORDER BY id SETTINGS storage_policy = 'hot_to_cold';Populate the table with test data by repeating the following query for several times:

INSERT INTO test_table1 SELECT rand() FROM numbers(10000000); -

Wait a bit and run the following query to see how the table data is distributed across disks:

SELECT disk_name, formatReadableSize(bytes_on_disk) AS size FROM system.parts WHERE (table = 'test_table1') AND active;As a result of merges, a large data part has been created and then was moved to the "cold" disk.

┌─disk_name─┬─size───────┐ │ disk_hdd │ 530.49 MiB │ │ disk_ssd │ 22.26 MiB │ │ disk_ssd │ 22.26 MiB │ └───────────┴────────────┘