ZooKeeper

To replicate data and execute distributed queries in ADQM through ZooKeeper, you can:

- use an external ZooKeeper cluster;
- install ZooKeeper as a service of the ADQM cluster.

External ZooKeeper

-

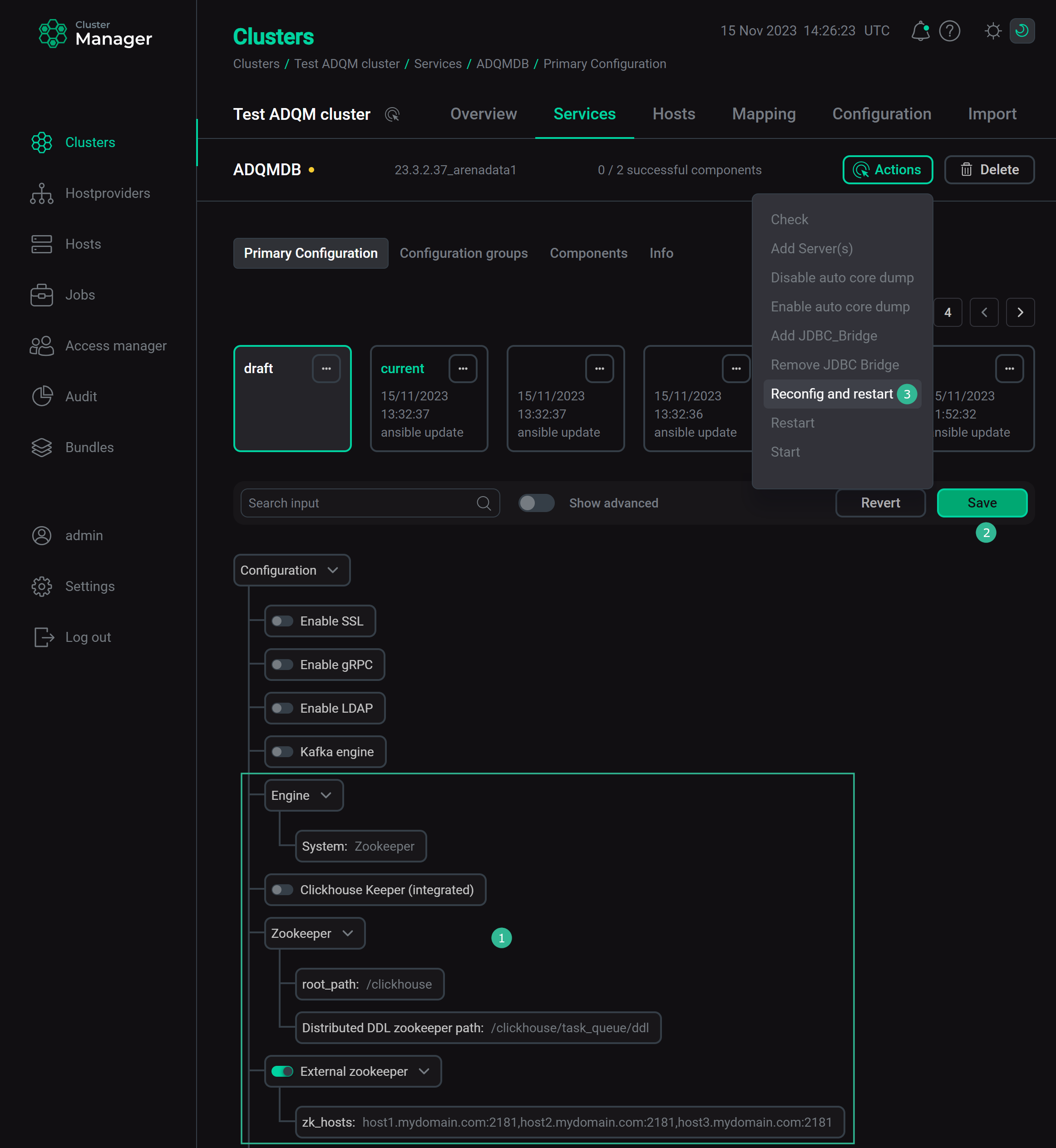

On the ADQMDB service’s configuration page of the ADCM interface, perform the following:

-

activate the External zookeeper option and list all hosts of the ZooKeeper ensemble (indicating ports through which they communicate) separated by commas in the zk_hosts field;

-

specify paths to ZooKeeper nodes in the Zookeeper section;

-

set

Zookeeperas the Coordination system parameter value.

-

-

Click Save and execute the Reconfig action for the ADQMDB service to use the external ZooKeeper as a coordination service for the ADQM cluster.
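The value of the zk_hosts field can be prepared with a small helper. The following is a minimal sketch, assuming that each entry is written as host:port and that 2181 is the client port; the host names zk-1, zk-2, and zk-3 and the helper name build_zk_hosts are hypothetical and not part of ADQM or ADCM.

```python
def build_zk_hosts(hosts, default_port=2181):
    """Join ensemble members into a comma-separated host:port list.

    Assumption: the zk_hosts field accepts entries in the host:port form.
    """
    entries = []
    for host in hosts:
        if ":" in host:
            # The caller already supplied an explicit port; keep it as is.
            entries.append(host)
        else:
            entries.append(f"{host}:{default_port}")
    return ",".join(entries)


# Hypothetical external ensemble of three hosts.
zk_hosts = build_zk_hosts(["zk-1", "zk-2", "zk-3:2182"])
print(zk_hosts)
```

The printed string can then be pasted into the zk_hosts field; check the exact format expected by your ADCM version before relying on it.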

ZooKeeper as a service of ADQM

ADQM provides a special service that you can add and install on a cluster to coordinate replication and query distribution through ZooKeeper. To do this, perform the following steps in the ADCM interface:

- Add the Zookeeper service to the ADQM cluster.

- Install the Zookeeper Server component on an odd number of hosts.

-

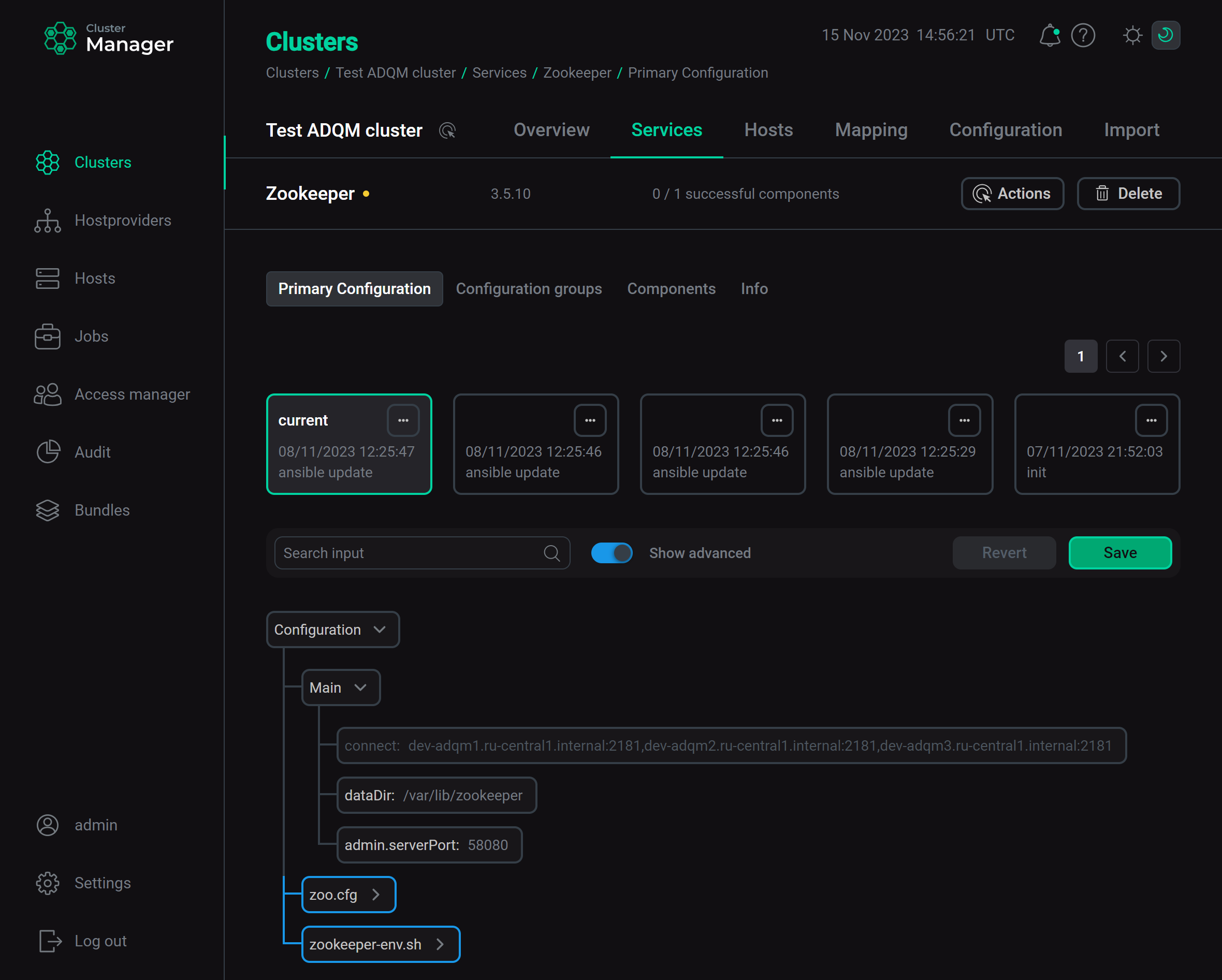

Configure the Zookeeper service (see descriptions of parameters available for the service in the corresponding section of the Configuration parameters article).

Set up the Zookeeper service

Set up the Zookeeper serviceClick Save to save settings.

-

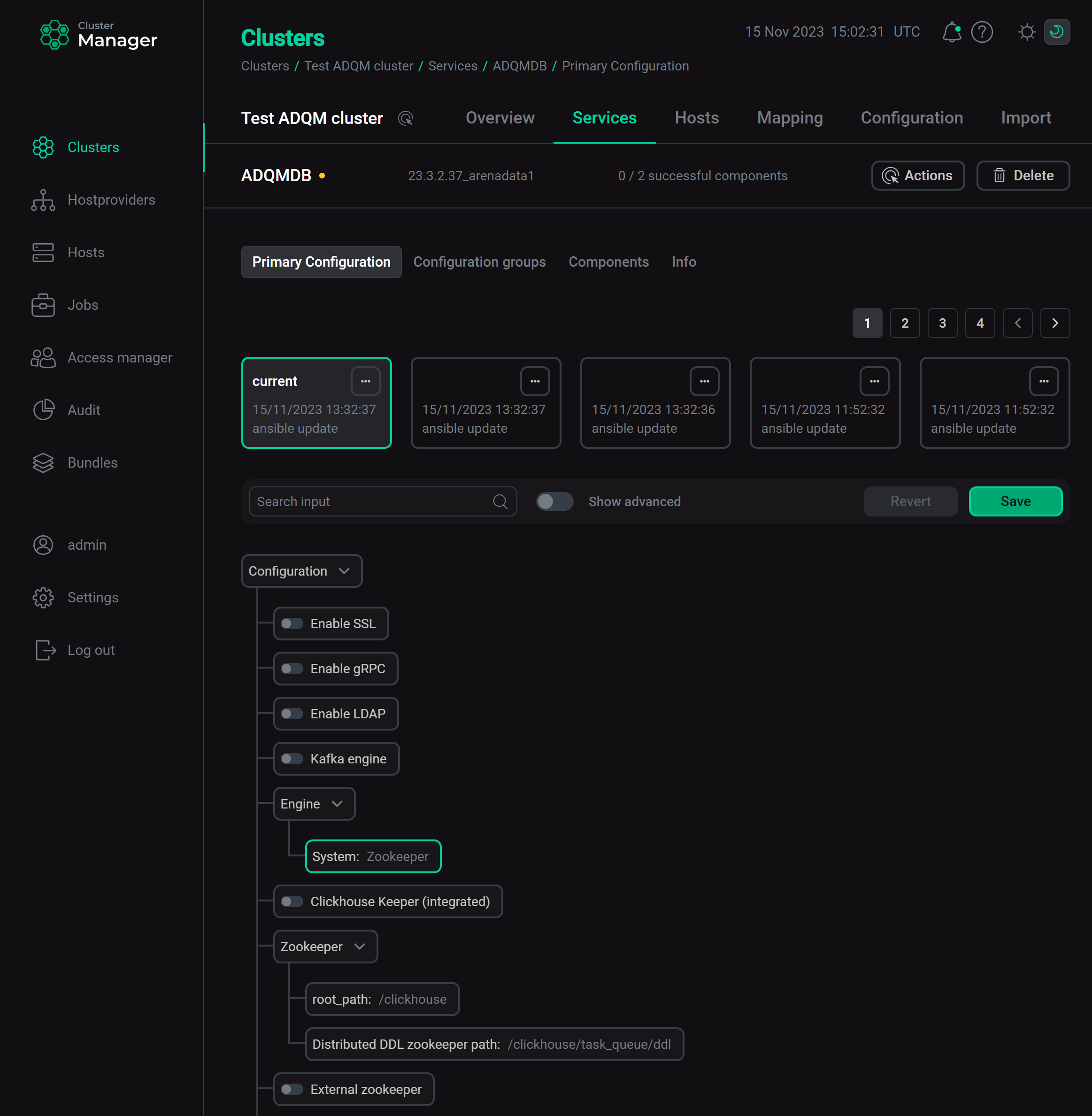

On the ADQMDB service configuration page, set

Zookeeperas the Coordination system parameter value. Enable the Zookeeper service

Enable the Zookeeper serviceConfigure also paths to ZooKeeper nodes in the Zookeeper section if needed.

- Click Save and execute the Reconfig action for the ADQMDB service to use the installed ZooKeeper as a coordination service for the ADQM cluster.
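The recommendation to use an odd number of hosts follows from how a ZooKeeper ensemble stays available: it needs a strict majority of its servers to be running. The sketch below only illustrates this arithmetic; the function names are hypothetical.

```python
def quorum_size(ensemble_size):
    """Smallest number of servers that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size):
    """How many servers can fail while a majority is still alive."""
    return ensemble_size - quorum_size(ensemble_size)


for n in (3, 4, 5):
    print(f"{n} hosts: quorum {quorum_size(n)}, tolerated failures {tolerated_failures(n)}")
```

An ensemble of four hosts tolerates one failure, the same as an ensemble of three, so the extra even-numbered host adds no fault tolerance.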


Test replicated and distributed tables

Configure ADQM cluster with ZooKeeper

- Configure a test ADQM cluster with two shards of two replicas each (hosts used in this example are host-1, host-2, host-3, and host-4). To learn how to do this via the ADCM interface, read Configure logical clusters in the ADCM interface. In this case, all necessary sections described below are added to the configuration file of each server automatically.

  - Logical cluster configuration

    In the config.xml file, the cluster configuration is defined in the remote_servers section:

        <remote_servers>
            <default_cluster>
                <shard>
                    <internal_replication>true</internal_replication>
                    <weight>1</weight>
                    <replica>
                        <host>host-1</host>
                        <port>9000</port>
                    </replica>
                    <replica>
                        <host>host-2</host>
                        <port>9000</port>
                    </replica>
                </shard>
                <shard>
                    <internal_replication>true</internal_replication>
                    <weight>1</weight>
                    <replica>
                        <host>host-3</host>
                        <port>9000</port>
                    </replica>
                    <replica>
                        <host>host-4</host>
                        <port>9000</port>
                    </replica>
                </shard>
            </default_cluster>
        </remote_servers>

    In this example, a ReplicatedMergeTree table is used, so the internal_replication parameter is set to true for each shard. In this case, the replicated table manages data replication itself (data is written to any available replica, and the other replica receives the data asynchronously). If you use regular tables, set the internal_replication parameter to false. In that case, data is replicated by a Distributed table (data is written to all replicas of a shard).

  - Macros

    Each server configuration should also contain the macros section, which defines the shard and replica identifiers that are replaced with values corresponding to a specific host when replicated tables are created on the cluster (ON CLUSTER). For example, macros for host-1 in the default_cluster cluster:

        <macros>
            <replica>1</replica>
            <shard>1</shard>
        </macros>

    If you configure a logical cluster in the ADCM interface via the Cluster Configuration setting and specify the cluster name, for example, abc, ADQM writes macros to config.xml as follows (for host-1 in the abc cluster with the same topology as provided above):

        <macros>
            <abc_replica>1</abc_replica>
            <abc_shard>1</abc_shard>
        </macros>

    In this case, use the {abc_shard} and {abc_replica} variables within ReplicatedMergeTree parameters when creating replicated tables.

- Install the Zookeeper service on three hosts (host-1, host-2, host-3). After that, the following sections are added to the config.xml file:

  - zookeeper: list of ZooKeeper nodes;
  - distributed_ddl: path in ZooKeeper to the queue with DDL queries (if multiple clusters use the same ZooKeeper, this path should be unique for each cluster).

      <zookeeper>
          <node>
              <host>host-1</host>
              <port>2181</port>
          </node>
          <node>
              <host>host-2</host>
              <port>2181</port>
          </node>
          <node>
              <host>host-3</host>
              <port>2181</port>
          </node>
          <root>/clickhouse</root>
      </zookeeper>
      <distributed_ddl>
          <path>/clickhouse/task_queue/ddl</path>
      </distributed_ddl>
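To see how per-host macros relate to the cluster topology, the following sketch derives a macros section for every host from a list of shards. It is an illustration only, not the way ADQM generates config.xml. It assumes that replica numbers restart at 1 inside each shard (the text above shows values for host-1 only), and the names build_macros and render_macros_xml are hypothetical.

```python
import xml.etree.ElementTree as ET


def build_macros(shards, cluster_name=None):
    """Return {host: {macro_name: value}} for a list of shards.

    shards is a list of shards, each a list of replica host names.
    Assumption: replicas are numbered from 1 within each shard.
    """
    prefix = f"{cluster_name}_" if cluster_name else ""
    macros = {}
    for shard_num, replicas in enumerate(shards, start=1):
        for replica_num, host in enumerate(replicas, start=1):
            macros[host] = {
                f"{prefix}replica": str(replica_num),
                f"{prefix}shard": str(shard_num),
            }
    return macros


def render_macros_xml(host_macros):
    """Serialize one host's macros as a <macros> XML fragment."""
    root = ET.Element("macros")
    for name, value in host_macros.items():
        ET.SubElement(root, name).text = value
    return ET.tostring(root, encoding="unicode")


topology = [["host-1", "host-2"], ["host-3", "host-4"]]
print(render_macros_xml(build_macros(topology)["host-1"]))
print(render_macros_xml(build_macros(topology, "abc")["host-1"]))
```

For host-1 this reproduces the two macros fragments shown above, without and with the abc prefix.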

Replicated tables

- Execute the following query on one of the hosts (for example, on host-1) to create a replicated table:

      CREATE TABLE test_local ON CLUSTER default_cluster (id Int32, value_string String)
      ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard}/test_local', '{replica}')
      ORDER BY id;

  This query creates the test_local table on all hosts of the default_cluster cluster.

- Insert data into the table on host-2:

      INSERT INTO test_local VALUES (1, 'a');

- Make sure data is replicated. To do this, select data from the test_local table on host-1:

      SELECT * FROM test_local;

  If replication works correctly, the table on host-1 will automatically receive data written to the replica on host-2:

      ┌─id─┬─value_string─┐
      │  1 │ a            │
      └────┴──────────────┘
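The {shard} and {replica} placeholders in the ReplicatedMergeTree parameters are what ties replicas together: hosts whose macros expand to the same ZooKeeper path replicate one another. The sketch below illustrates the substitution with plain Python string formatting. The macro values for host-2, host-3, and host-4 are assumptions (only host-1 is shown in the configuration above), and the function names are hypothetical.

```python
from collections import defaultdict

ZK_PATH_TEMPLATE = "/clickhouse/tables/{shard}/test_local"
REPLICA_TEMPLATE = "{replica}"

# Assumed macros per host; only the host-1 values come from the text above.
HOST_MACROS = {
    "host-1": {"shard": "1", "replica": "1"},
    "host-2": {"shard": "1", "replica": "2"},
    "host-3": {"shard": "2", "replica": "1"},
    "host-4": {"shard": "2", "replica": "2"},
}


def expand(template, macros):
    """Substitute {name} placeholders with this host's macro values."""
    return template.format(**macros)


def replicas_by_path(host_macros):
    """Group (host, replica_name) pairs by the expanded ZooKeeper path."""
    groups = defaultdict(list)
    for host, macros in host_macros.items():
        path = expand(ZK_PATH_TEMPLATE, macros)
        groups[path].append((host, expand(REPLICA_TEMPLATE, macros)))
    return dict(groups)


for path, members in replicas_by_path(HOST_MACROS).items():
    print(path, members)
```

Under these assumptions host-1 and host-2 share the path /clickhouse/tables/1/test_local, which is why a row inserted on host-2 appears on host-1, while host-3 and host-4 form a separate replication group.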

Distributed tables

Note that a SELECT query against the test_local table returns data only from the table on the host where the query is run.

Insert data into any replica of the second shard (for example, into the table on host-3):

    INSERT INTO test_local VALUES (2, 'b');

Repeat the SELECT query on host-1 or host-2. The result does not include data from the second shard:

    ┌─id─┬─value_string─┐
    │  1 │ a            │
    └────┴──────────────┘
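The behavior above can be modeled with a toy in-memory cluster. This is a deliberately simplified sketch: replication inside a shard is treated as instantaneous (in reality it is asynchronous), and the class and method names are hypothetical.

```python
SHARDS = {1: ["host-1", "host-2"], 2: ["host-3", "host-4"]}


class ToyCluster:
    """Each shard keeps one row list that stands for all of its replicas."""

    def __init__(self, shards):
        # Map every host to the shard it belongs to.
        self.shard_of = {
            host: shard for shard, hosts in shards.items() for host in hosts
        }
        self.rows = {shard: [] for shard in shards}

    def insert_local(self, host, row):
        """Write to the local table; every replica of the same shard sees the row."""
        self.rows[self.shard_of[host]].append(row)

    def select_local(self, host):
        """Read the local table: only the rows of this host's shard."""
        return list(self.rows[self.shard_of[host]])


cluster = ToyCluster(SHARDS)
cluster.insert_local("host-2", (1, "a"))
cluster.insert_local("host-3", (2, "b"))
print(cluster.select_local("host-1"))
print(cluster.select_local("host-4"))
```

A local read on host-1 sees only the row written through host-2, and a local read on host-4 sees only the row written through host-3.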

You can use distributed tables to get data from all shards (for details, see the Distributed tables section).

- Create a table using the Distributed engine:

      CREATE TABLE test_distr ON CLUSTER default_cluster AS default.test_local
      ENGINE = Distributed(default_cluster, default, test_local, rand());

- Run the following query on any host:

      SELECT * FROM test_distr;

  The output includes data from both shards:

      ┌─id─┬─value_string─┐
      │  1 │ a            │
      └────┴──────────────┘
      ┌─id─┬─value_string─┐
      │  2 │ b            │
      └────┴──────────────┘
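The last argument of the Distributed engine, rand(), is the sharding key. It matters only for rows inserted through test_distr; in the steps above, rows were written directly to test_local. As the ClickHouse documentation describes it, the target shard is chosen by the remainder of the sharding expression divided by the total weight of all shards. The following sketch illustrates that rule; the function name pick_shard is hypothetical, and the code is not taken from ADQM.

```python
def pick_shard(sharding_value, weights):
    """Return the 1-based number of the shard that receives a row.

    weights lists the <weight> of each shard in configuration order.
    A shard owns the remainders in [sum of previous weights, that sum + its weight).
    """
    total = sum(weights)
    if total <= 0 or any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative with a positive sum")
    remainder = sharding_value % total
    upper = 0
    for shard_num, weight in enumerate(weights, start=1):
        upper += weight
        if remainder < upper:
            return shard_num
    raise AssertionError("unreachable: remainder is always below the total")


# Both shards in default_cluster have weight 1.
print(pick_shard(10, [1, 1]))
print(pick_shard(11, [1, 1]))
```

With equal weights, even sharding values go to the first shard and odd values to the second, so a random key spreads inserted rows roughly evenly between the two shards.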