Debezium сonnector for MS SQL Server

The article shows an example of running and using the Debezium connector for MS SQL Server via ADS Control. The connector is designed to create records in Kafka topics about events of changes against database tables on Microsoft SQL Server.

Prerequisites

The following environment was used to create the connector:

-

The ADS cluster is installed according to the Online installation guide. The minimum ADS version is 3.6.2.2.b1.

-

Kafka and Kafka Connect services have been installed to the ADS cluster.

-

To automatically create a Kafka topic, the auto.create.topics.enable parameter is enabled in the server.properties group when configuring the Kafka service.

-

The ADS Control cluster is installed according to the guide Get started with Arenadata Streaming Control and integrated with the ADS cluster being used.

-

MS SQL Server is installed on the host with the Ubuntu 20.04 operating system.

p@ssword123!password is set for thesauser,testDB1database anddbo.customerstable are created undersauser. -

MS SQL Server IP address is

10.92.40.239. For incoming connections, the default port number is1433. -

To allow the Debezium connector to capture change event records for database operations, enable change data capture (CDC):

-

for table, changes to which should be captured by the connector.

Create a Debezium connector for MS SQL Server

To create a Debezium connector for MS SQL Server via ADS Control, use the SqlServerConnector connector plugin.

To create connectors using the ADS Control, you need to:

-

Go to the Kafka Connects page in the ADS Control web interface. The Kafka Connects page becomes available after selecting a cluster in the cluster management section and going to the desired tab on the General page.

-

Select the desired cluster and go to the Kafka Connect instance overview page.

-

Click Create Connector on the Kafka Connect instance overview page. After clicking Create Connector, the window for selecting the connector plugin Clusters → <cluster name> → Kafka Connects → <cluster name> connector → Kafka connector plugins opens.

-

Select the desired connector to create.

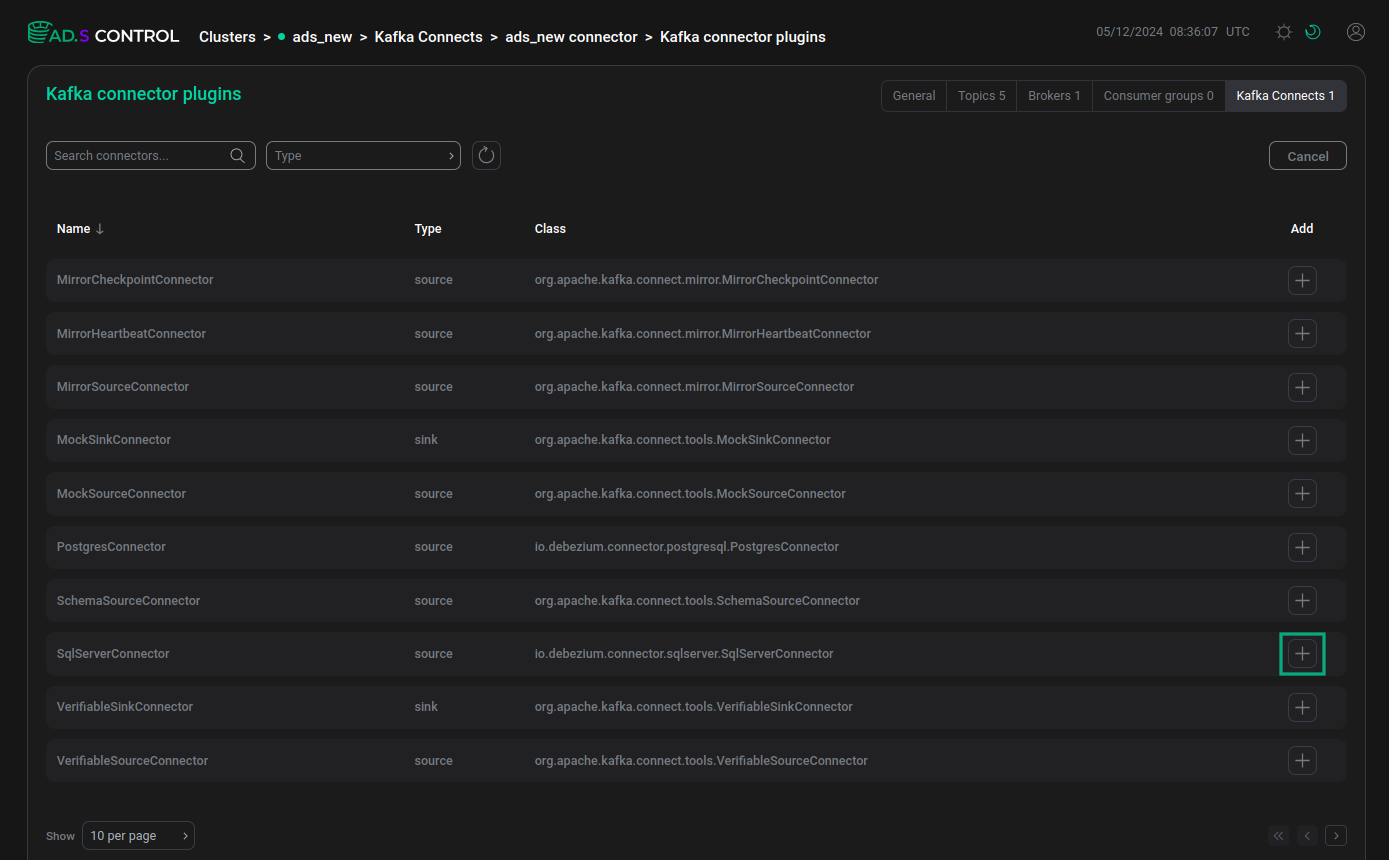

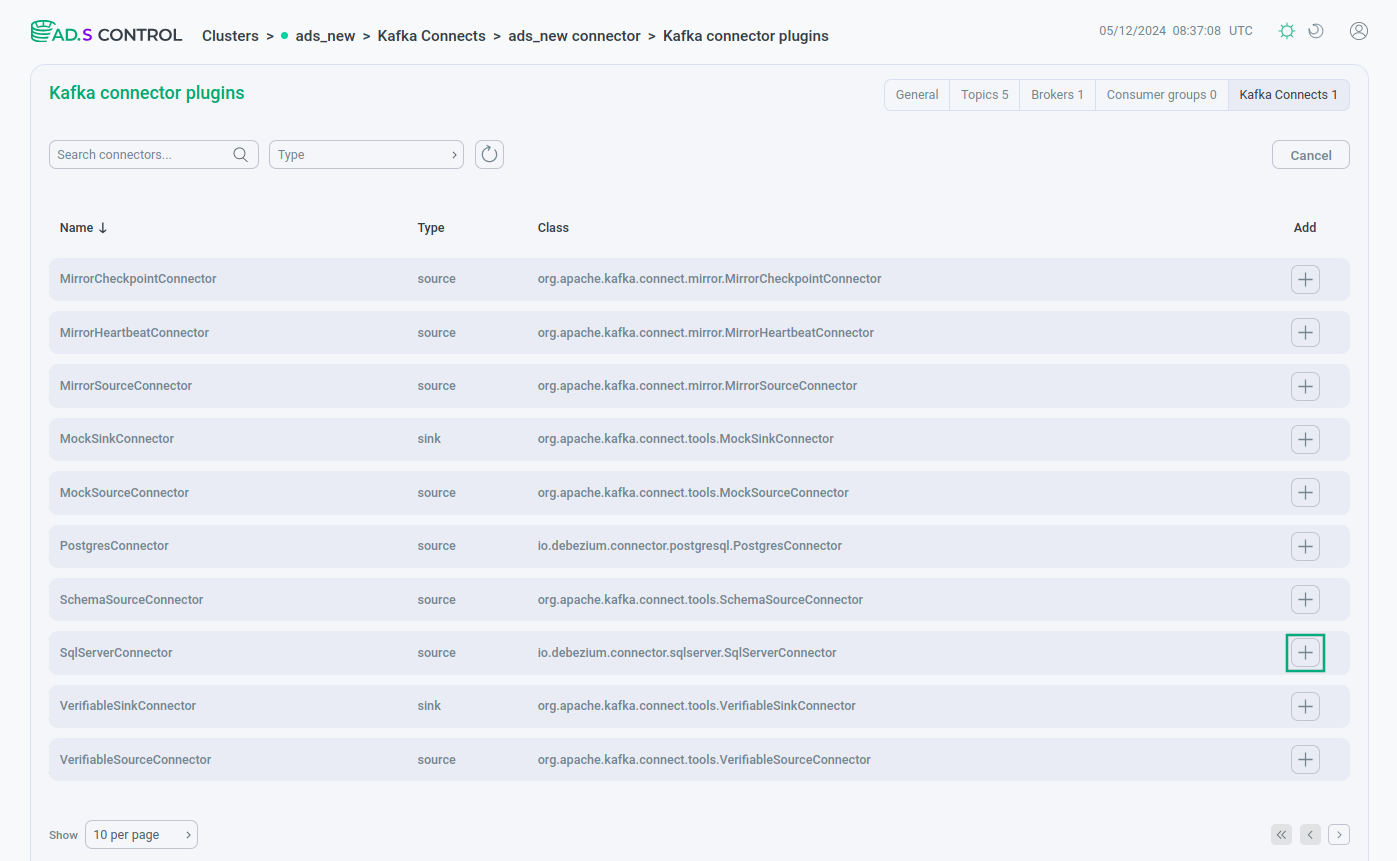

Selecting the Kafka Connect connector to create

Selecting the Kafka Connect connector to create Selecting the Kafka Connect connector to create

Selecting the Kafka Connect connector to create -

Fill in the connector configuration parameters. If necessary, use the parameter information:

-

Kafka Connect service configurations in the ADS configuration parameters article;

You can fill in the configuration in the form of a JSON file. To do this, enable the JSON view switch.

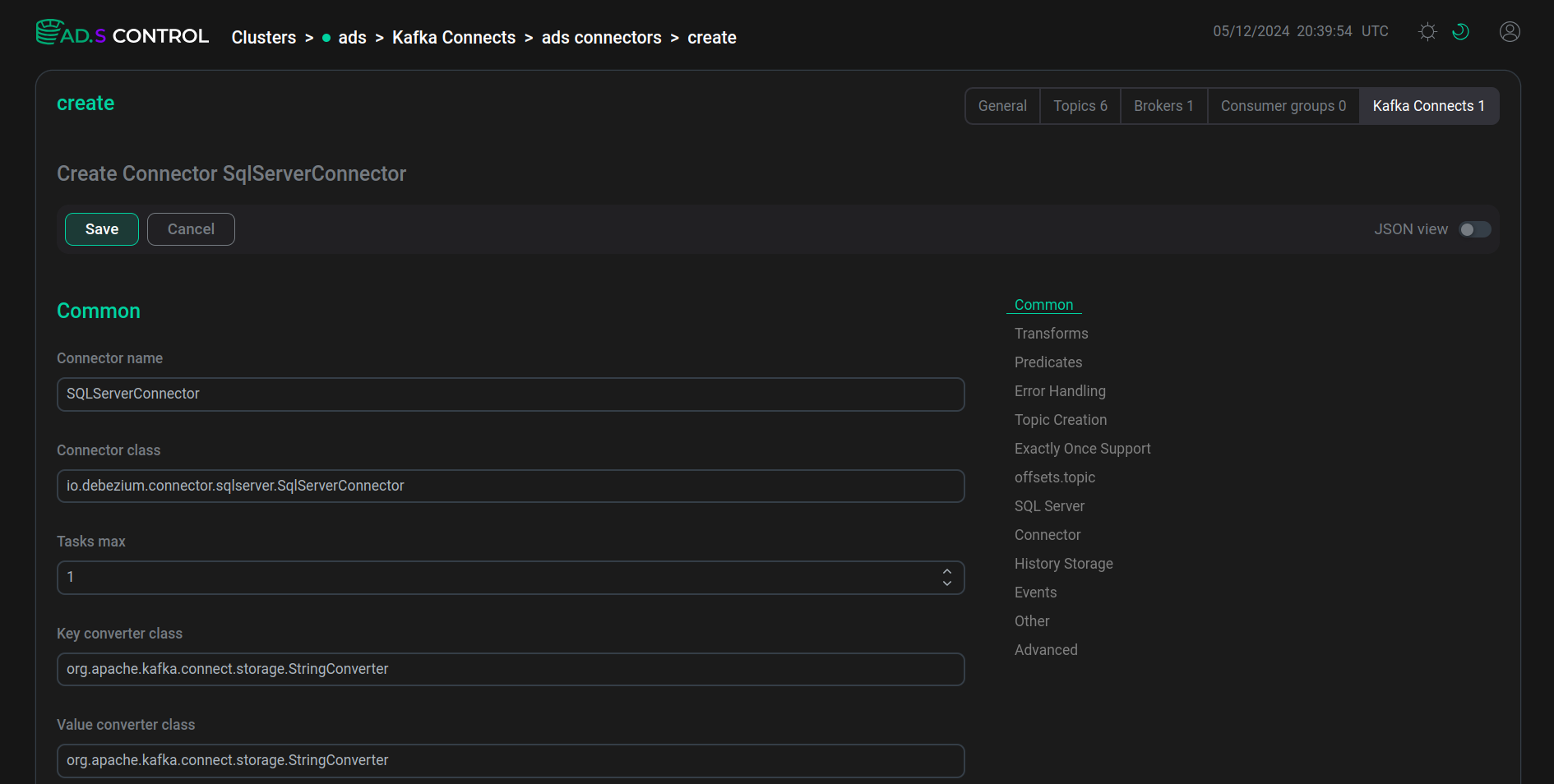

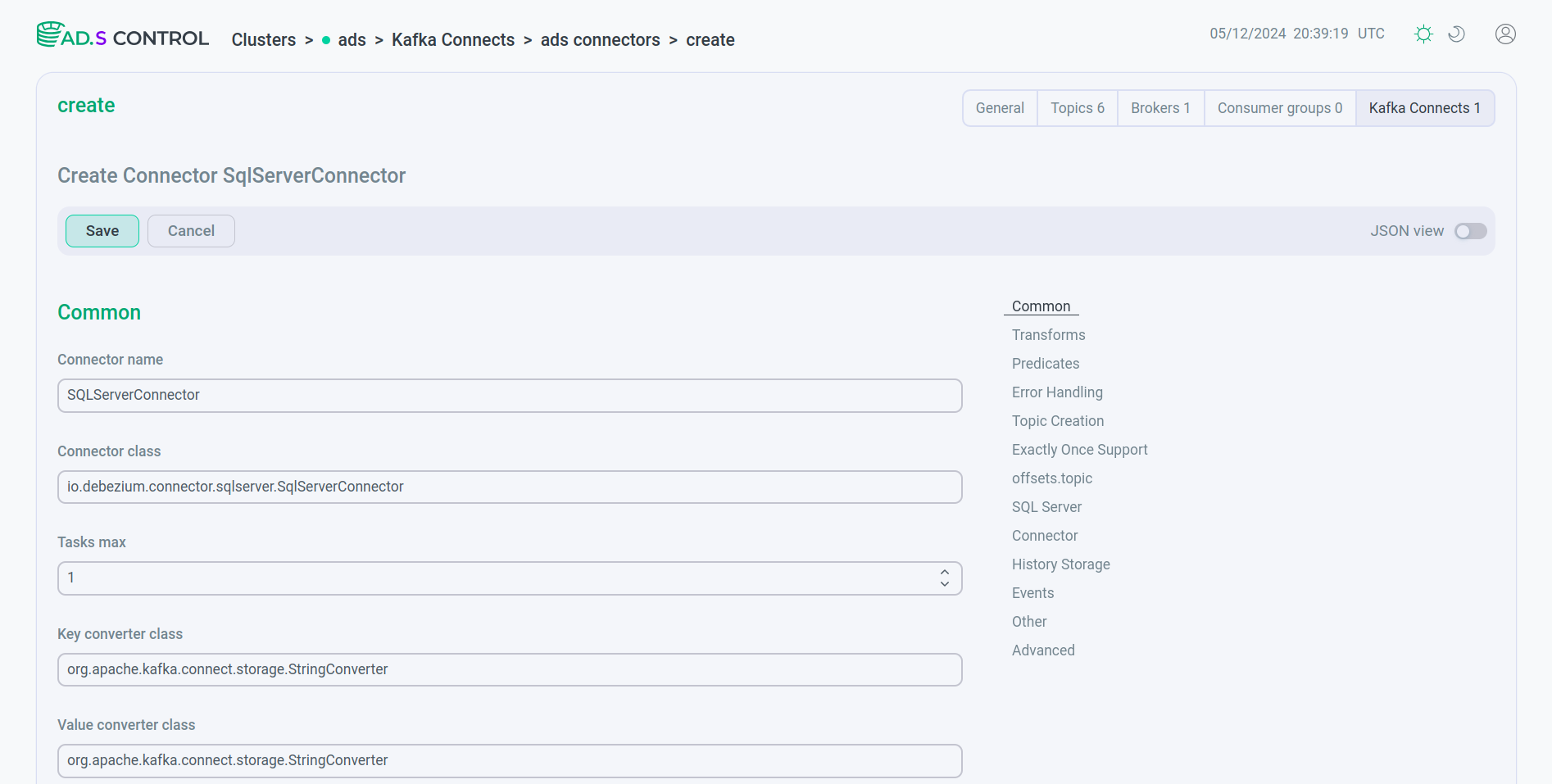

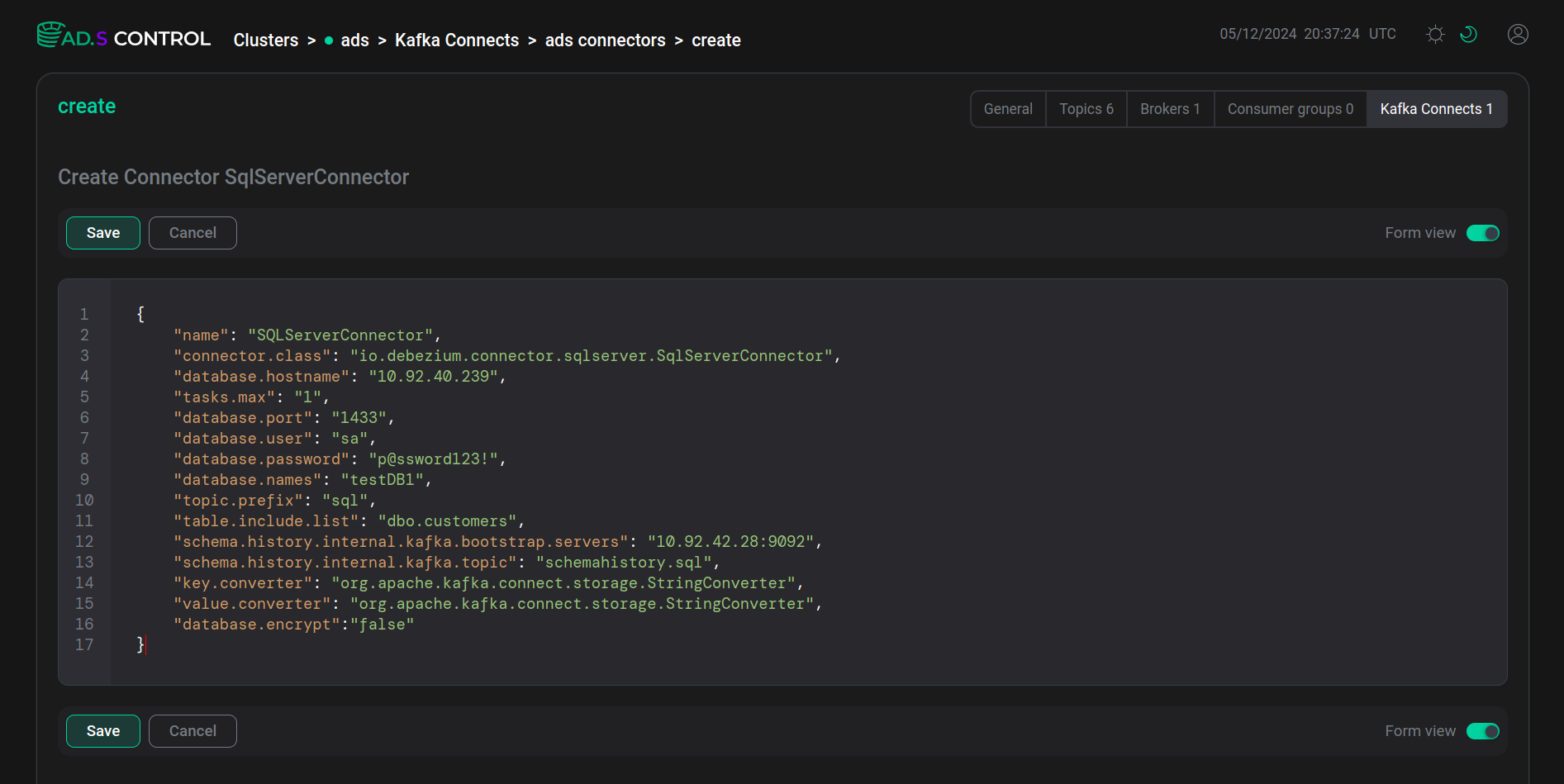

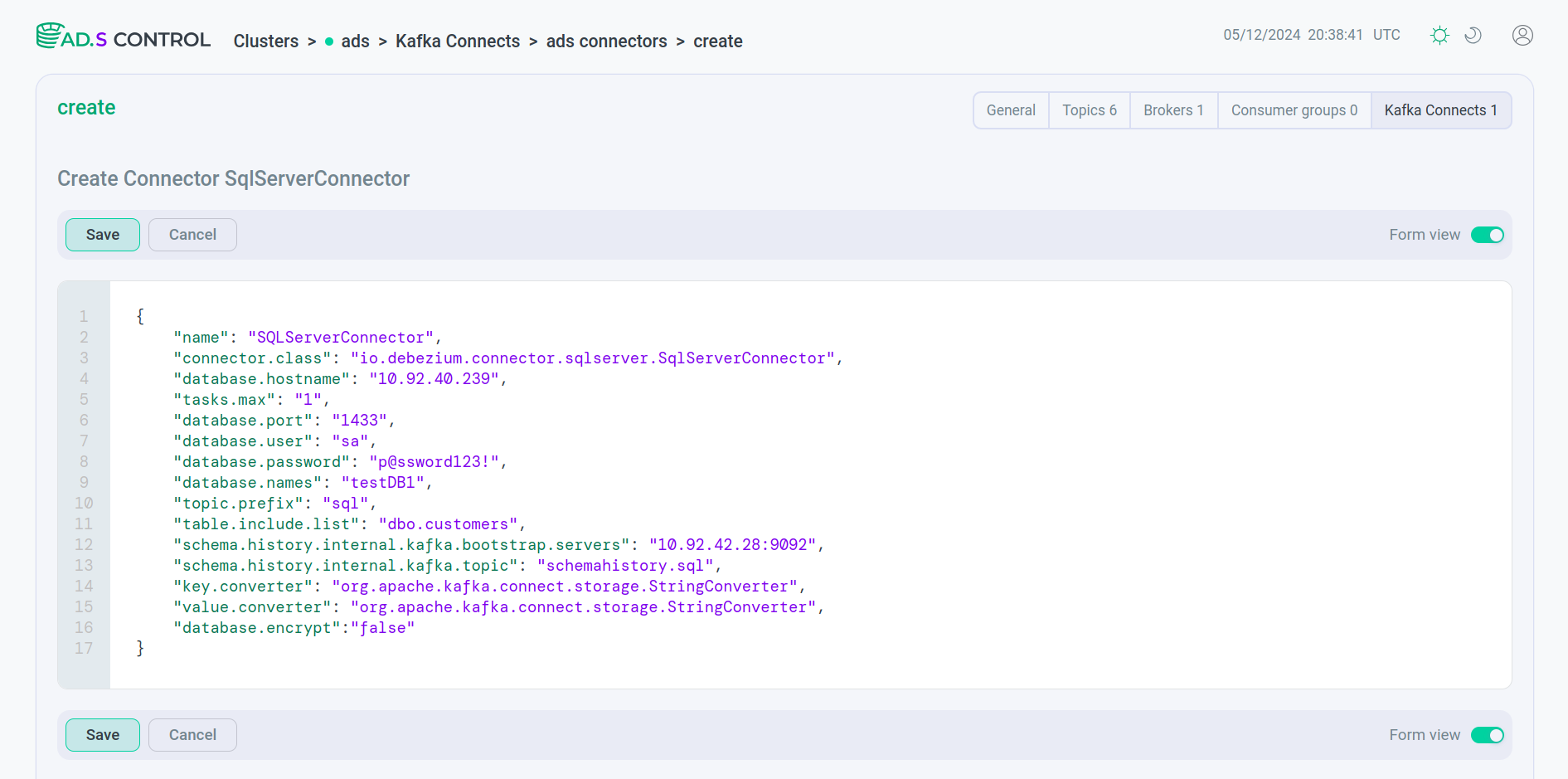

Connector configuration

Connector configuration Connector configuration

Connector configuration Connector configuration JSON file

Connector configuration JSON file Connector configuration JSON fileExample contents of a JSON file with a simple Debezium connector configuration for MS SQL Server

Connector configuration JSON fileExample contents of a JSON file with a simple Debezium connector configuration for MS SQL Server{ "name": "SQLServerConnector", "connector.class": "io.debezium.connector.sqlserver.SqlServerConnector", "database.hostname": "10.92.40.239", "tasks.max": 1, "database.port": 1433, "database.user": "sa", "database.password": "p@ssword123!", "database.names": ["testDB1"], "topic.prefix": "sql", "table.include.list": ["dbo.customers"], "schema.history.internal.kafka.bootstrap.servers": "10.92.42.28:9092", "schema.history.internal.kafka.topic": "schemahistory.sql", "key.converter": "org.apache.kafka.connect.storage.StringConverter", "value.converter": "org.apache.kafka.connect.storage.StringConverter", "database.encrypt":"false" }Attribute Description name

Name of the connector that will be used in the Kafka Connect service

connector.class

Class name for the connector

database.hostname

SQL Server instance address

database.port

SQL Server instance port number

database.user

SQL Server username

database.password

Password for SQL Server user

database.names

Name of the database from which changes will be captured

topic.prefix

Topic prefix for an SQL Server instance/cluster that forms a namespace and is used in all Kafka topic names that the connector writes to, as well as Kafka Connect schema names, and the corresponding Avro schema namespaces when using the Avro Converter

table.include.list

List of all tables whose changes should be recorded by Debezium

schema.history.internal.kafka.bootstrap.servers

List of Kafka brokers that this connector will use to write and restore DDL statements to the database schema history topic

schema.history.internal.kafka.topic

Name of the database schema history section in which the connector will write and restore DDL statements. This topic is for internal use only and should not be used by consumers

key.converter

Converter type for message key

value.converter

Converter type for message value

database.encrypt

Disables connection encryption when specified as

false(encryption is enabled by default in MS SQL Server version 18 and later).If encryption is not disabled, the following parameters must be specified:

database.ssl.truststoreanddatabase.ssl.truststore.password -

-

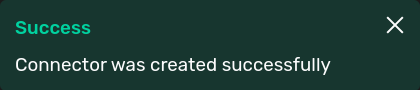

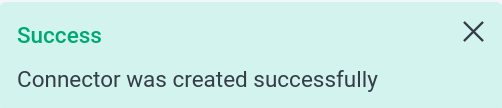

After filling, click Save and get a message about the successful creation of the connector.

Message about the successful creation of the connector

Message about the successful creation of the connector Message about the successful creation of the connector

Message about the successful creation of the connector -

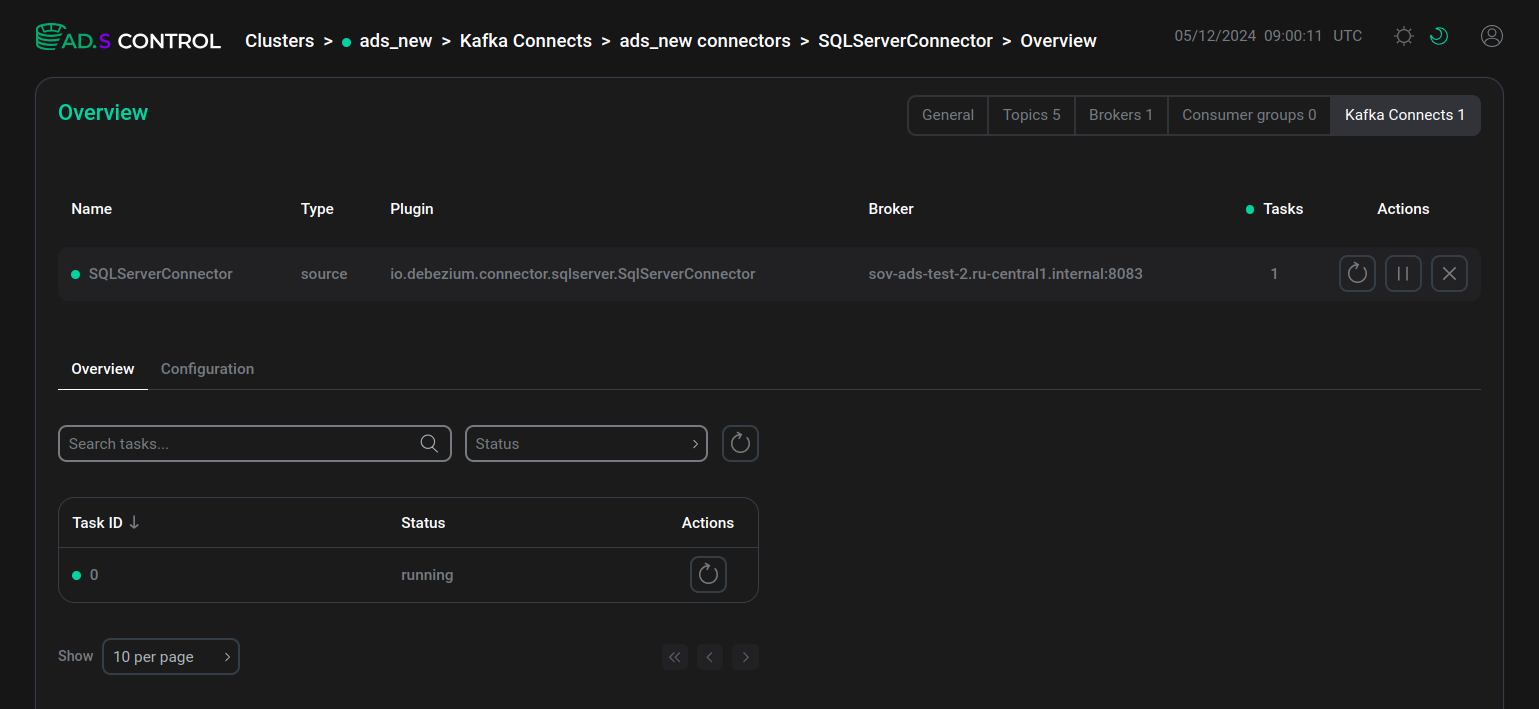

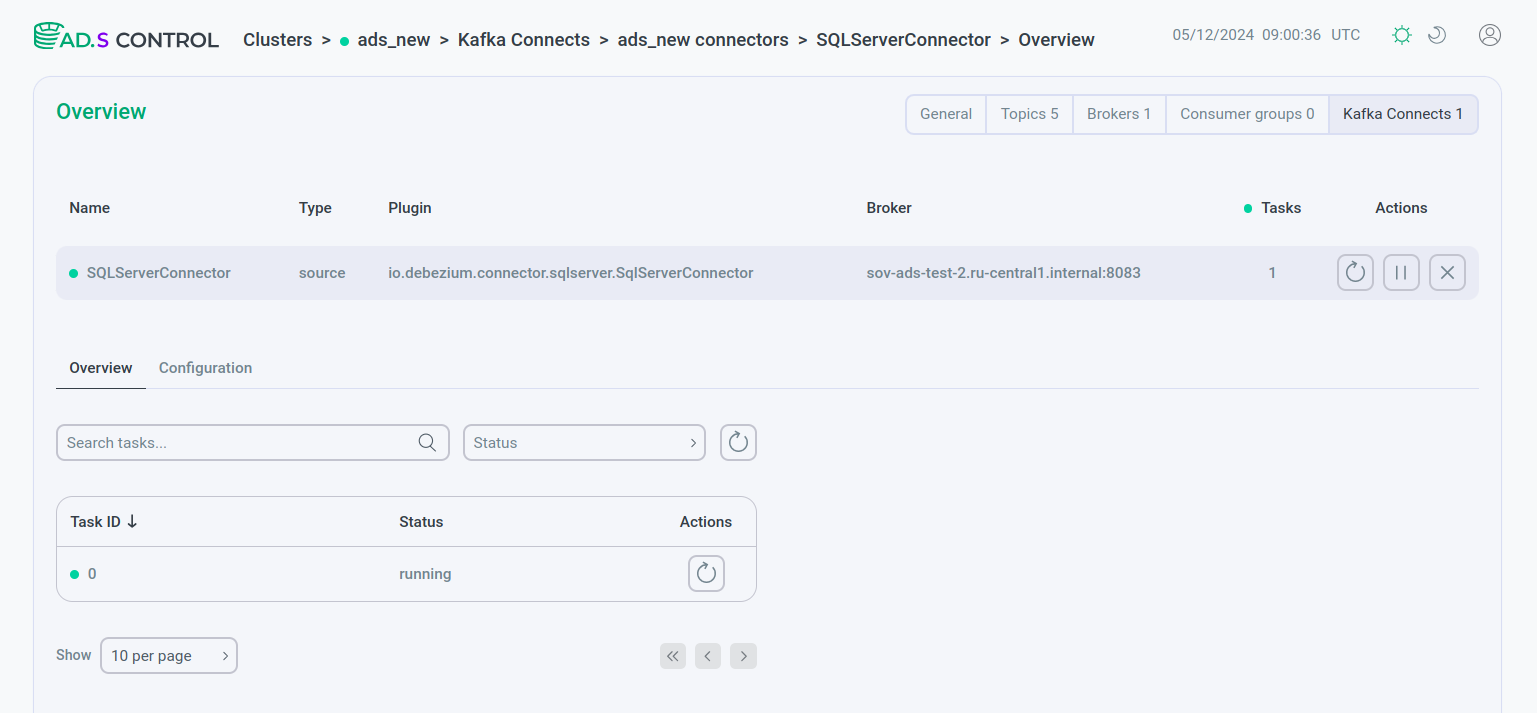

Check that the opened page displays the created connector in the working status.

Created connector

Created connector Created connector

Created connectorIf after creating the connector the task is created with an error, the contents of the error can be seen after clicking

located in the Status field of the task.

Use the Debezium Connector for MS SQL Server

The first time the Debezium connector connects to an SQL Server database, it creates a consistent snapshot of the database schemas.

After the initial snapshot is taken, the connector continuously commits row-level changes for INSERT, UPDATE, or DELETE operations. The connector creates events for each data change operation and transmits them to Kafka topics.

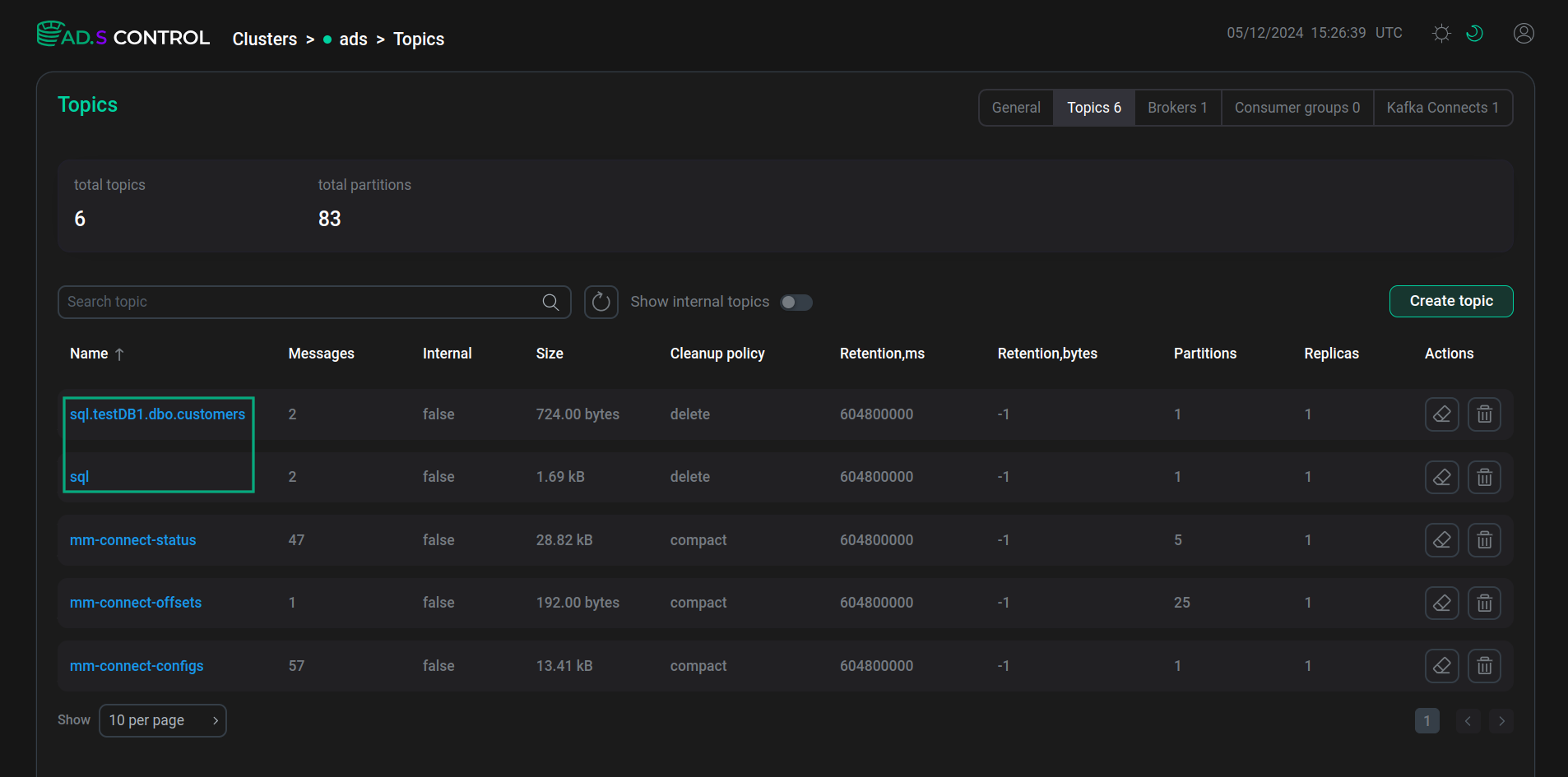

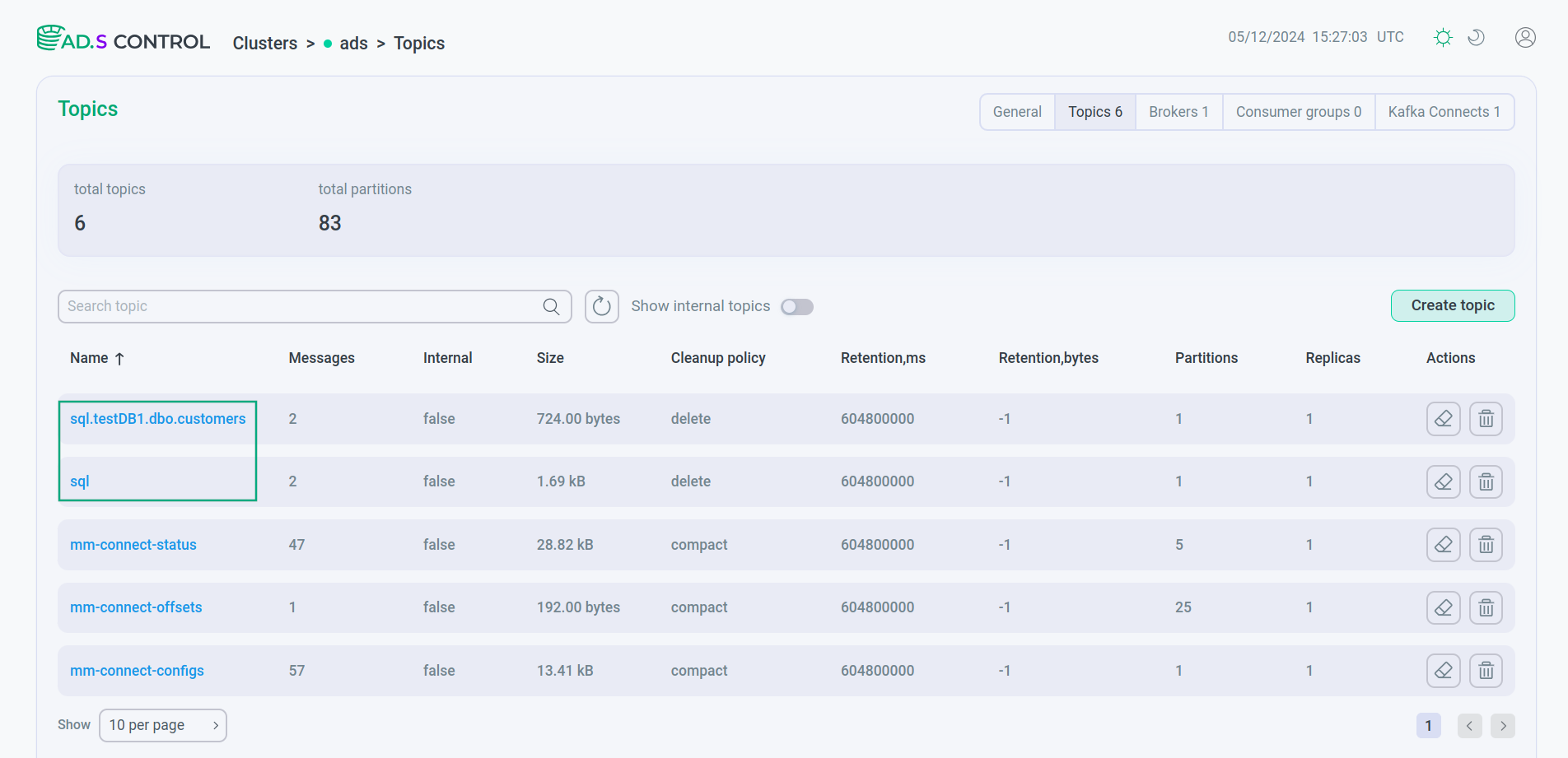

On the Topics page of the ADS Control user interface, you can see the topics created by the connector.

Topic for recording change events

For each table, the connector writes events for all INSERT, UPDATE, and DELETE operations to a separate Kafka topic, in the case of the example above — the sql.testDB1.dbo.customers topic.

The connector uses the following form of the topic name to record change events: <topicPrefix>.<schemaName>.<tableName>, where:

-

<topicPrefix>— the logical name of the server specified in thetopic.prefixconfiguration property; -

<schemaName>— the name of the database schema in which the change event occurred; -

<tableName>— the name of the database table in which the change event occurred.

Topic for recording schema changes

For each table that has CDC enabled, the Debezium connector maintains a history of schema change events that are applied to the tables in the database. The connector writes schema change events to a separate Kafka topic, in the case of the example given — sql. This name is the same as the topicPrefix parameter specified when creating the connector.

|

NOTE

More detailed information about the topic names created by the Debezium connector can be found in the Topic names section of the Debezium Connector for MS SQL Server documentation. |

snapshot.mode

Using the snapshot.mode parameter, you can configure the moment at which the connector takes snapshots.